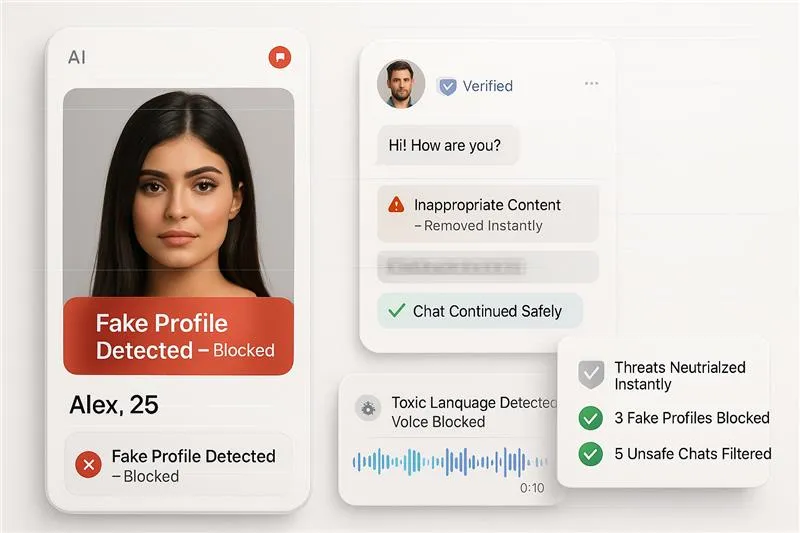

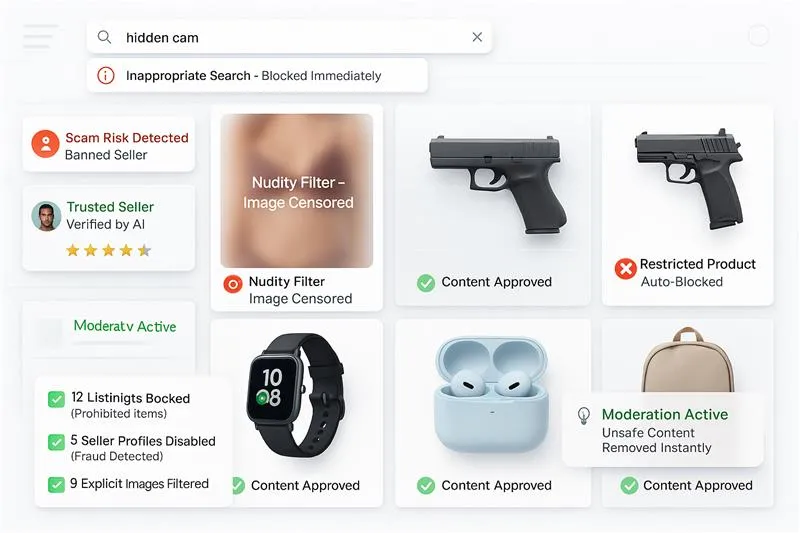

IMAGE MODERATION

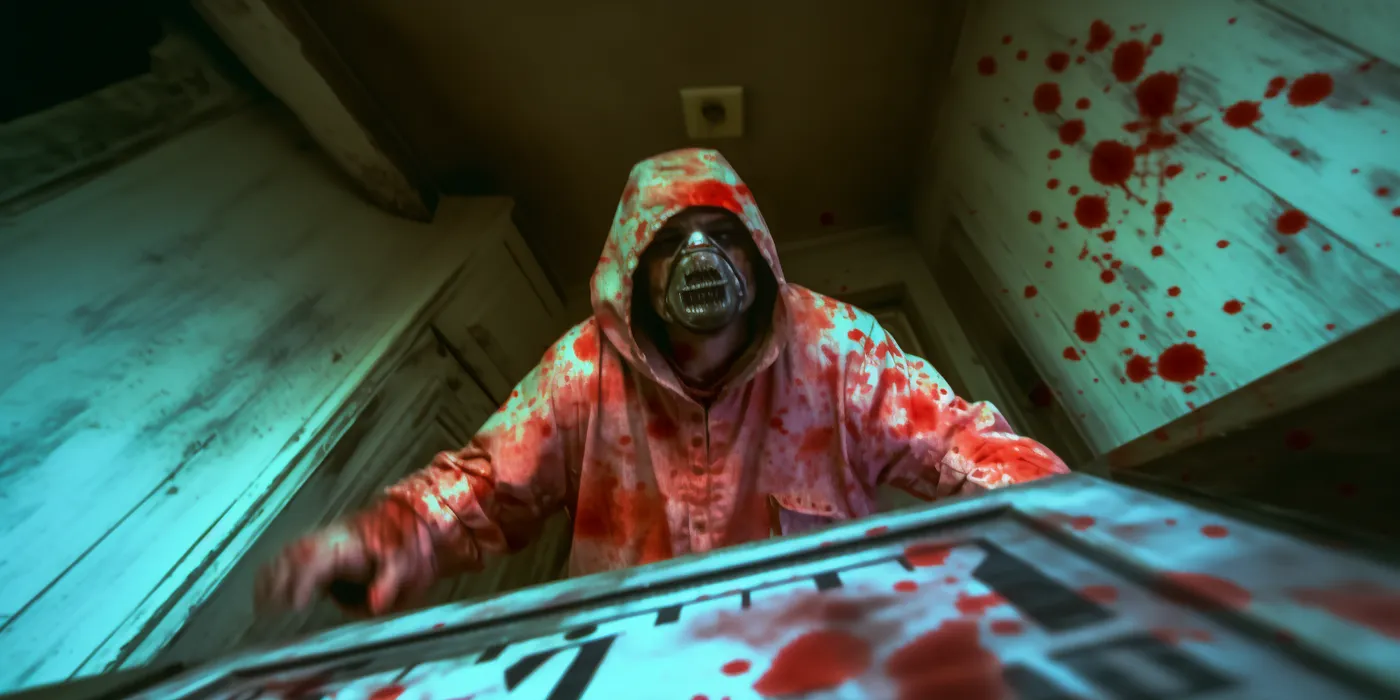

Stop Nudity, Violence, Hate & Deepfakes in Images with Real-Time AI Moderation.

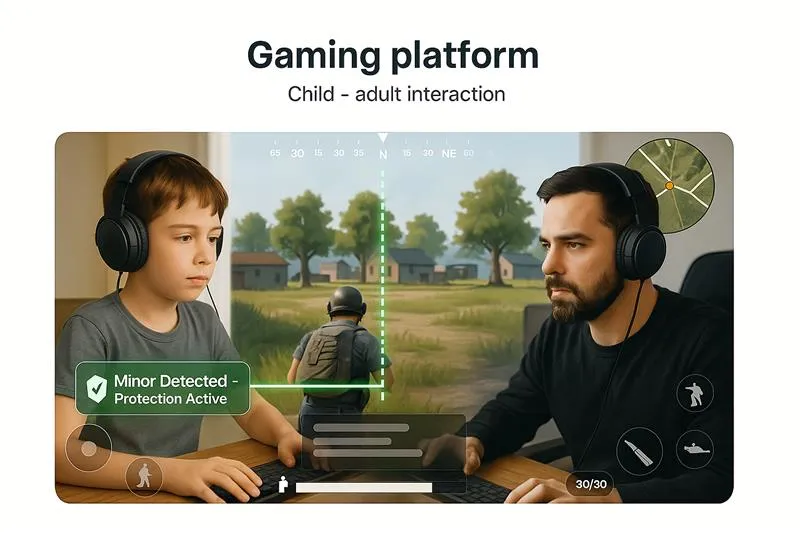

Mediafirewall.ai uses AI image moderation to scan every photo in real time for nudity, sexual content, violence, and other unsafe visuals. It auto-flags and blocks risky images before they go live on your app or website. Built for dating apps, social platforms, and marketplaces, it is designed to cut manual review and protect users.
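
For teams wondering how a flag-or-block step might sit in front of publishing, here is a minimal sketch. It is not Mediafirewall.ai's API: the category names, the `decide` function, and both thresholds are hypothetical, and the scores are assumed to come from whatever image classifier you use, as values between 0 and 1.

```python
from enum import Enum
from typing import Mapping


class Decision(Enum):
    ALLOW = "allow"   # publish immediately
    FLAG = "flag"     # hold for human review
    BLOCK = "block"   # reject before it goes live


# Hypothetical thresholds for illustration only; tune them for your platform.
FLAG_THRESHOLD = 0.5
BLOCK_THRESHOLD = 0.9


def decide(scores: Mapping[str, float]) -> Decision:
    """Map per-category risk scores (0 to 1) to a publishing decision.

    The highest score across all categories drives the outcome, so one
    high-risk category is enough to flag or block the image.
    """
    worst = max(scores.values(), default=0.0)
    if worst >= BLOCK_THRESHOLD:
        return Decision.BLOCK
    if worst >= FLAG_THRESHOLD:
        return Decision.FLAG
    return Decision.ALLOW


if __name__ == "__main__":
    # Example scores are made up, not real classifier output.
    print(decide({"nudity": 0.95, "violence": 0.10}))
    print(decide({"nudity": 0.60, "violence": 0.10}))
    print(decide({"nudity": 0.05, "violence": 0.02}))
```

Using two thresholds keeps clear violations off the platform automatically while sending borderline images to a reviewer, which is one way to reduce manual review without removing it.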