VIOLENCE DETECTION FILTER


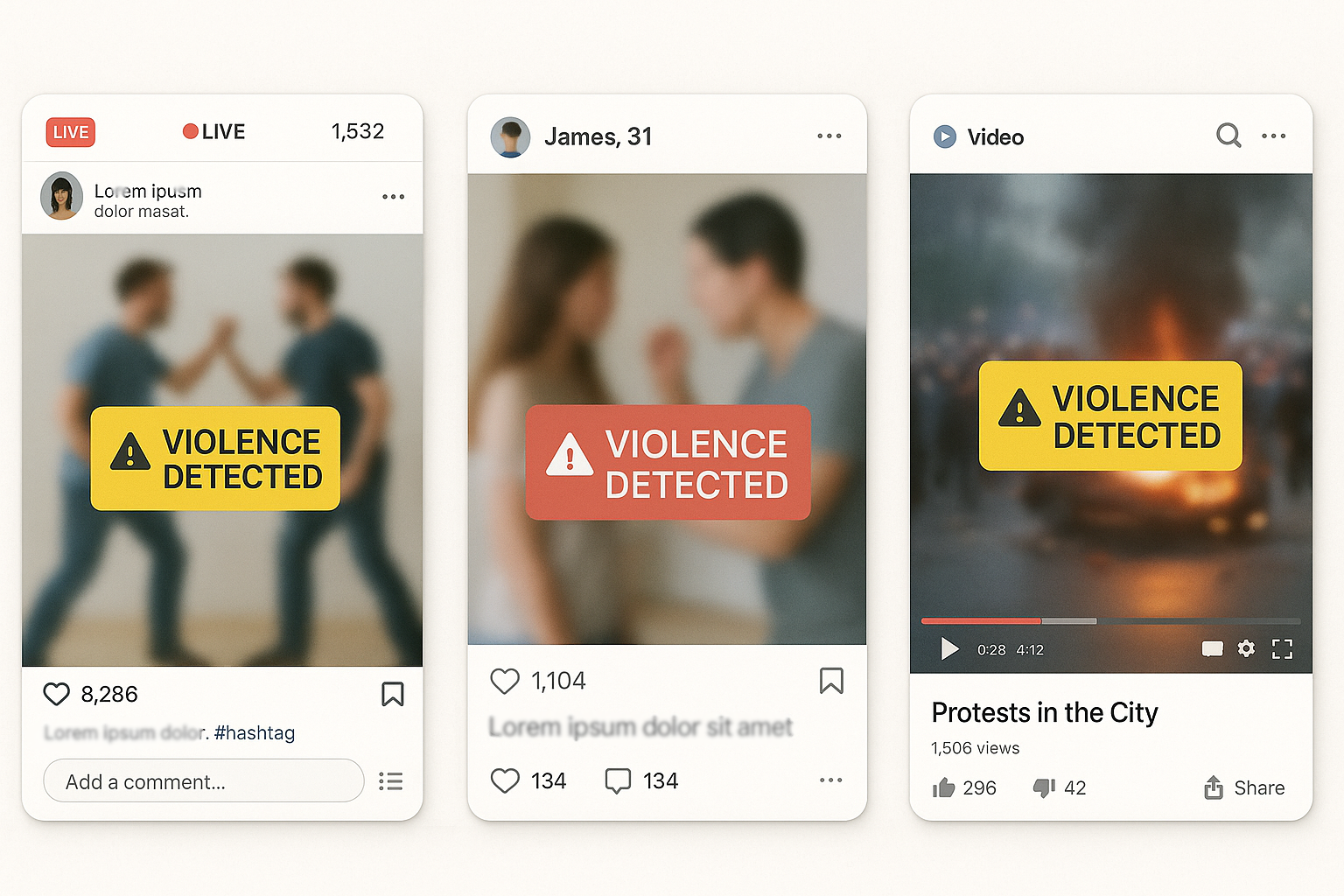

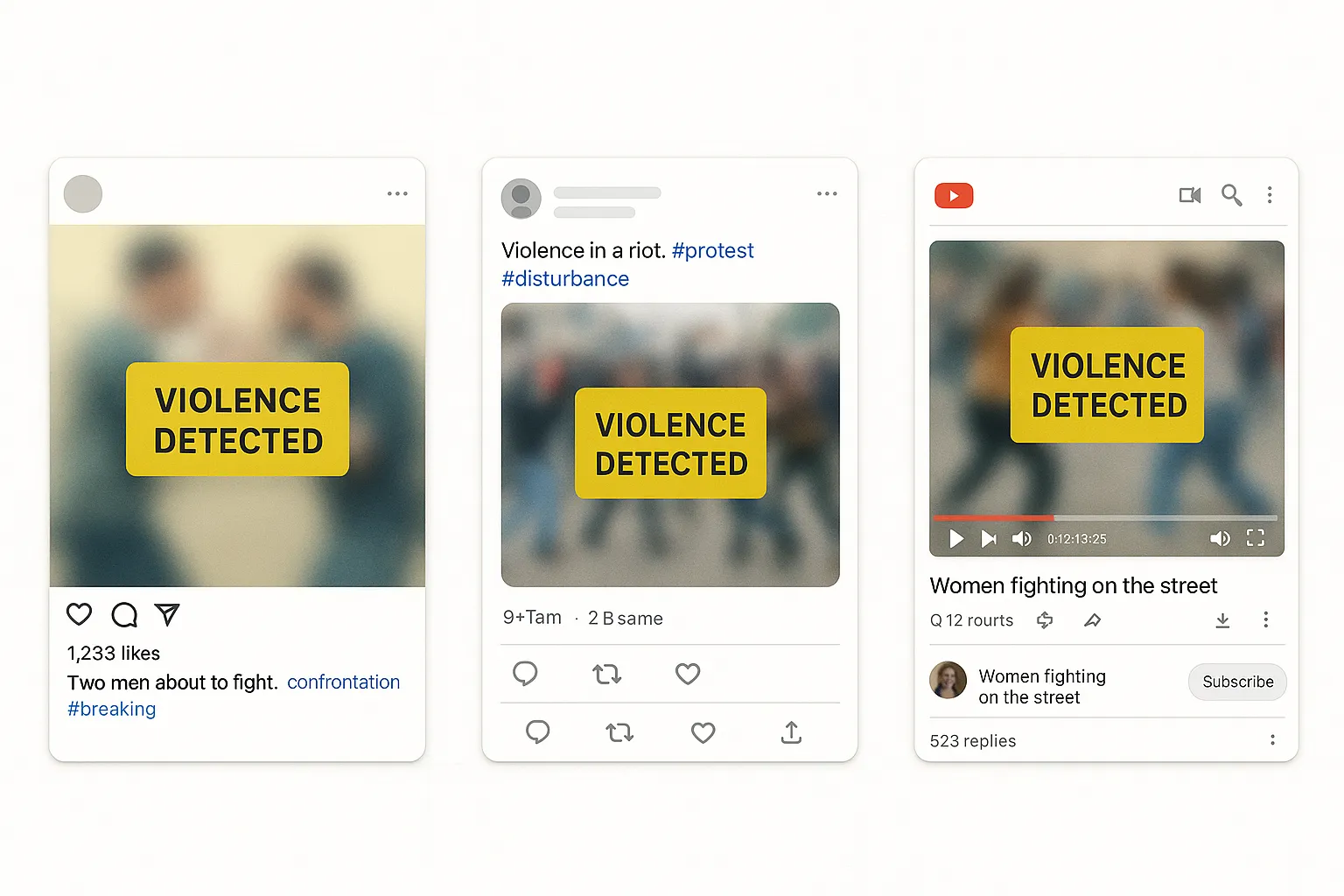

Stop Graphic Violence, Terror Footage & Weapon Imagery with Real-Time AI Moderation

Mediafirewall AI detects blood/gore, assaults, animal cruelty, medical trauma, and war/terror scenes across images and video. We scan frames and thumbnails before content goes live, blocking shock content, executions, and mass-casualty footage before users see it. Weapon and crime-scene cues are flagged instantly, with policy-aware, audit-ready decisions. Protect users and brand safety while aligning with global compliance requirements.

Supported Moderation

Every image, video, or text is checked instantly, so no risks slip through.

What Is the Violence Detection Filter?

Block Gore

Flags blood, open wounds, mutilation, and corpse imagery (blurred or unblurred). Prevents shock-bait uploads and traumatic exposure...

Stop Assaults

Detects fights, torture, and real or simulated beatings, even in low-light or shaky footage. Enforces pre-visibility gates on lives, up...

Terror Scenes

Identifies bombings, executions, hostage footage, and mass-shooting clips. Limits the spread of extremist violence and reduces copycat risk.

Medical Trauma

Catches graphic surgeries, amputations, severe burns, and infection/deformity shock posts. Routes to age-gating/warnings or blocks per...

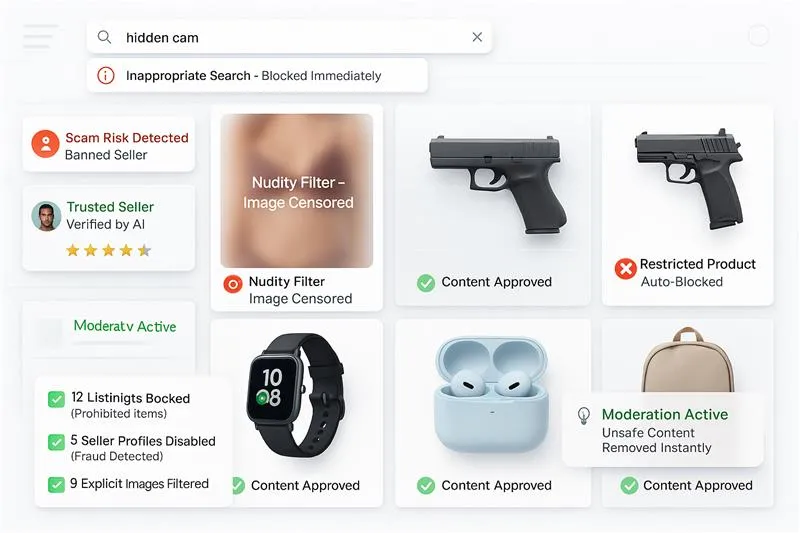

Weapons & Crime

Recognizes guns, blades, and crime-scene cues; scans thumbnails and overlays. Blocks glamorized violence and dangerous tutorials.

How Our Moderation Works

Mediafirewall’s AI Violence Detection Filter reviews each uploaded image, video, or livestream frame using deep visual estimation and motion tracking. When detected violence or threat behavior exceeds pre-configured thresholds, the content is automatically blocked, blurred, or escalated. Enforcement is instant, autonomous, and tuned to each platform’s policy requirements.
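The threshold step described above can be sketched as follows. This is a minimal illustration, assuming the model emits a 0.0 to 1.0 confidence score per violence category; the `Action` values, the `Thresholds` cut-offs, and the `decide` function are hypothetical and are not Mediafirewall's actual API.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # send to human review
    BLUR = "blur"
    BLOCK = "block"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical per-platform cut-offs on a 0.0-1.0 confidence score.
    block: float = 0.90
    blur: float = 0.70
    escalate: float = 0.50


def decide(scores: dict[str, float], t: Thresholds) -> tuple[Action, str | None]:
    """Map per-category violence scores to a single enforcement action.

    Returns the action and the category that triggered it (None when allowed).
    """
    if not scores:
        return Action.ALLOW, None
    # Judge the content by its strongest signal (highest-scoring category).
    category, top = max(scores.items(), key=lambda kv: kv[1])
    if top >= t.block:
        return Action.BLOCK, category
    if top >= t.blur:
        return Action.BLUR, category
    if top >= t.escalate:
        return Action.ESCALATE, category
    return Action.ALLOW, None


if __name__ == "__main__":
    frame_scores = {"gore": 0.12, "assault": 0.76, "weapon": 0.40}
    print(decide(frame_scores, Thresholds()))  # (<Action.BLUR: 'blur'>, 'assault')
```

Taking the highest-scoring category keeps the decision conservative: a frame is treated as severely as its worst signal. A platform that wants category-specific cut-offs (for example, a lower block threshold for executions than for weapons) could key the thresholds by category instead.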

Why Mediafirewall’s Violence Detection Filter?

When graphic violence can spread in seconds, trust becomes non-negotiable. This filter delivers more than detection; it gives enterprises control over what reaches their users in every format.

Policy-Driven Visual Safety

Stop graphic content at the point of upload. Enforce visual safety with no delay...

Livestream Protection Without Lag

Intercept violent acts in real time, whether it's a game stream, video call, or ...

Platform-Ready for Diverse Environments

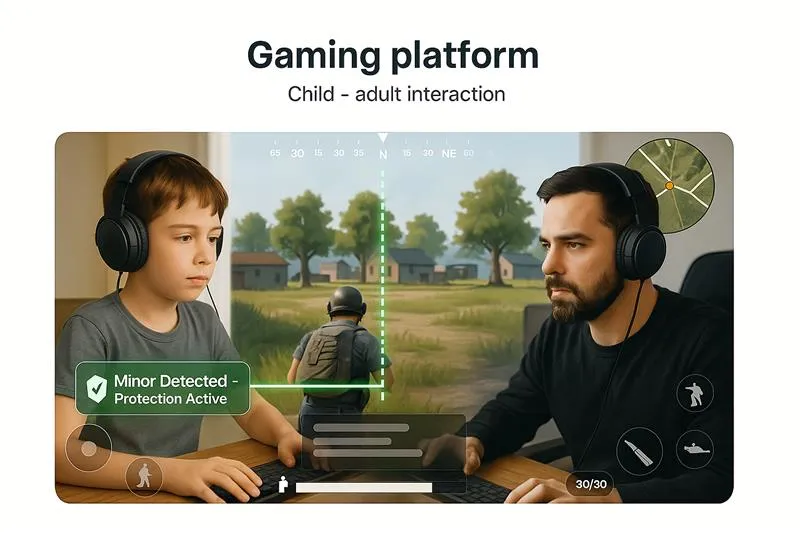

Whether you're protecting buyers on ecommerce platforms or students in education...

Regulatory and Brand Risk Compliance

Stay compliant with safety regulations and limit liability with automated, auditable, and ...

Related Solutions

Violence Detection Filter FAQ

Mediafirewall AI analyzes frames, motion, and audio cues to detect gore, assaults, weapons, and terror scenes; it returns boolean, policy-linked decisions before visibility.
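A boolean, policy-linked decision is easiest to audit when each verdict is stored alongside the rule that produced it. The sketch below assumes a hypothetical record schema (`ModerationDecision`, `policy_id`, `make_decision`); it illustrates the idea and does not describe Mediafirewall's real response format.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ModerationDecision:
    """Hypothetical audit record: a yes/no verdict tied to the policy that produced it."""
    content_id: str
    violates: bool        # the boolean decision returned before visibility
    policy_id: str        # hypothetical identifier of the platform rule applied
    category: str | None  # e.g. "gore" or "weapon"; None when nothing was flagged
    score: float
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_audit_json(self) -> str:
        # sort_keys gives a stable field order, which keeps audit logs easy to diff.
        return json.dumps(asdict(self), sort_keys=True)


def make_decision(
    content_id: str, policy_id: str, category: str, score: float, threshold: float
) -> ModerationDecision:
    """Turn a model score into a boolean verdict linked to a policy."""
    flagged = score >= threshold
    return ModerationDecision(
        content_id=content_id,
        violates=flagged,
        policy_id=policy_id,
        category=category if flagged else None,
        score=score,
    )


if __name__ == "__main__":
    decision = make_decision("vid-001", "policy-gore-v1", "gore", 0.93, threshold=0.8)
    print(decision.violates)  # True
    print(decision.to_audit_json())
```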

Because graphic media causes trauma and normalizes violence, pre-publish gating prevents exposure, limits virality, and reduces regulatory risk.

At ingest (uploads/thumbnails), on edits (captions, previews), and continuously in livestreams with sub-second decisions.
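For livestreams, one common way to make per-frame scores stable enough to act on is a k-of-n vote over recently sampled frames. The sketch below is a hypothetical illustration of that technique, not Mediafirewall's documented method; the `StreamGate` name, the 0.8 threshold, and the 3-of-5 window are assumptions. At an assumed sampling rate of 5 frames per second, a 5-sample window covers one second of video.

```python
from collections import deque


class StreamGate:
    """Gate a stream when at least `min_hits` of the last `window` sampled frames
    score at or above `threshold`.

    The k-of-n vote suppresses one-frame false positives (motion blur, a quick
    camera pan) while still reacting within a few samples.
    """

    def __init__(self, threshold: float = 0.8, window: int = 5, min_hits: int = 3):
        if not 1 <= min_hits <= window:
            raise ValueError("min_hits must be between 1 and window")
        self.threshold = threshold
        self.min_hits = min_hits
        # maxlen makes the oldest sample drop off automatically.
        self.recent: deque = deque(maxlen=window)

    def push(self, frame_score: float) -> bool:
        """Record one sampled frame's score; return True if the stream should be gated."""
        self.recent.append(frame_score >= self.threshold)
        return sum(self.recent) >= self.min_hits


if __name__ == "__main__":
    gate = StreamGate(threshold=0.8, window=5, min_hits=3)
    scores = [0.2, 0.9, 0.1, 0.85, 0.95, 0.3]
    print([gate.push(s) for s in scores])  # [False, False, False, False, True, True]
```

A larger window lowers false positives but delays the first gate; the right trade-off depends on each platform's latency budget.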

In thumbnails, quick cuts, background screens, lower-thirds, and props; the filter scans video frames, images, and text-on-image together.

Viewers, creators, advertisers, and trust & safety teams all benefit from safer feeds, fewer appeals, and audit-ready proof of enforcement.

Context rules can route qualifying content to age-gates, interstitial warnings, or limited visibility, rather than removal.
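Context rules of this kind can be expressed as a small lookup that replaces removal with a softer action when both the detected category and the content's context match. The category labels, context labels, `ContextRule`, and `route` below are hypothetical examples chosen for illustration; real rule sets are platform-specific.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ContextRule:
    """Hypothetical rule: for this category in this context, use a softer action."""
    category: str
    context: str  # illustrative labels such as "news" or "medical_education"
    action: str   # "age_gate", "interstitial", or "limited_visibility"


DEFAULT_ACTION = "remove"

RULES = [
    ContextRule("medical_trauma", "medical_education", "age_gate"),
    ContextRule("war_footage", "news", "interstitial"),
    ContextRule("weapon", "hunting", "limited_visibility"),
]


def route(category: str, context: str | None, rules: list[ContextRule]) -> str:
    """Return the first matching rule's action, falling back to removal."""
    for rule in rules:
        if rule.category == category and rule.context == context:
            return rule.action
    return DEFAULT_ACTION


if __name__ == "__main__":
    print(route("war_footage", "news", RULES))  # interstitial
    print(route("gore", None, RULES))           # remove
```

First match wins, so more specific rules should be listed before general ones; anything unmatched falls back to removal.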

Yes. Guns, knives, spent shells, blood pools, body bags, and tape markers are flagged with scene-context checks.

Our screenshot-detection filter, used with face verification, spots screen-captured images during profile checks, helping ensure the face is original, live, and not a screenshot.