FACE MATCH FILTER

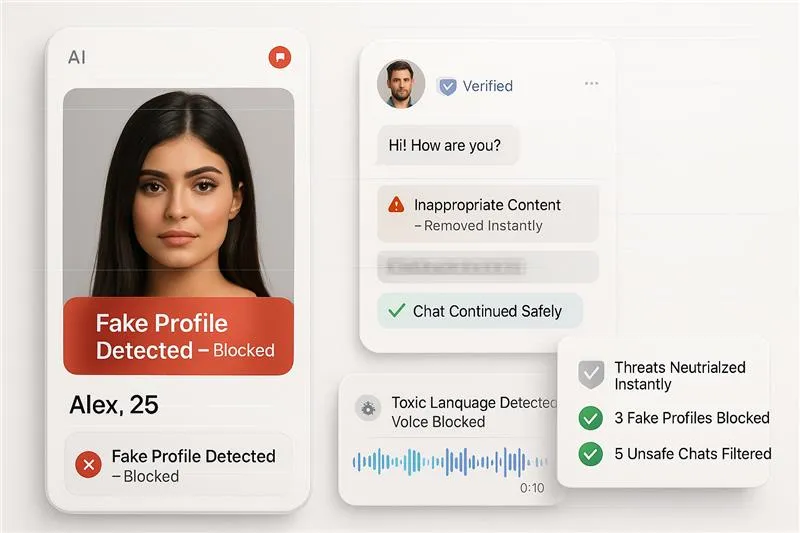

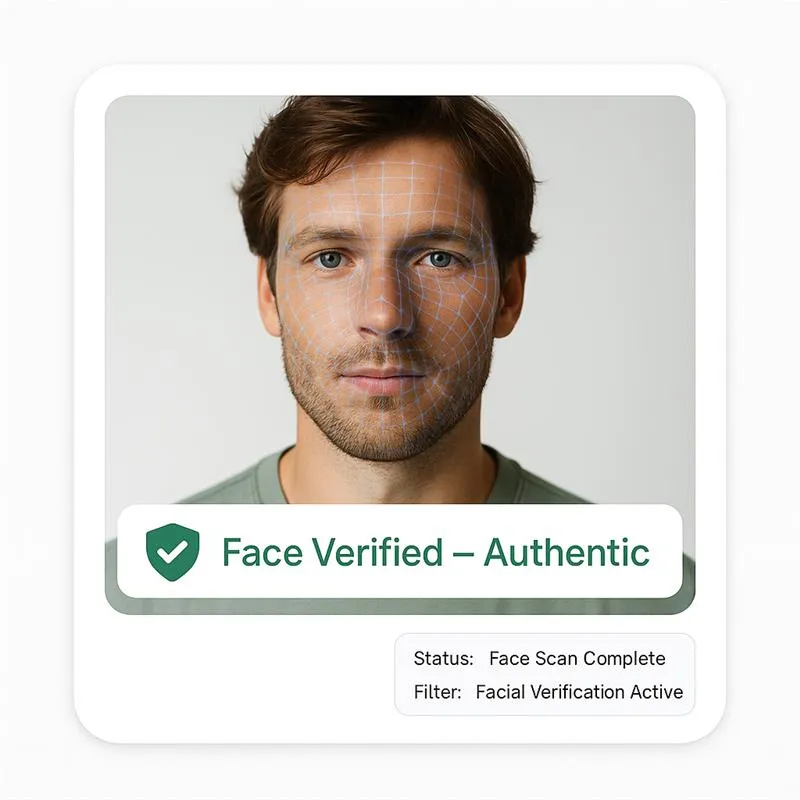

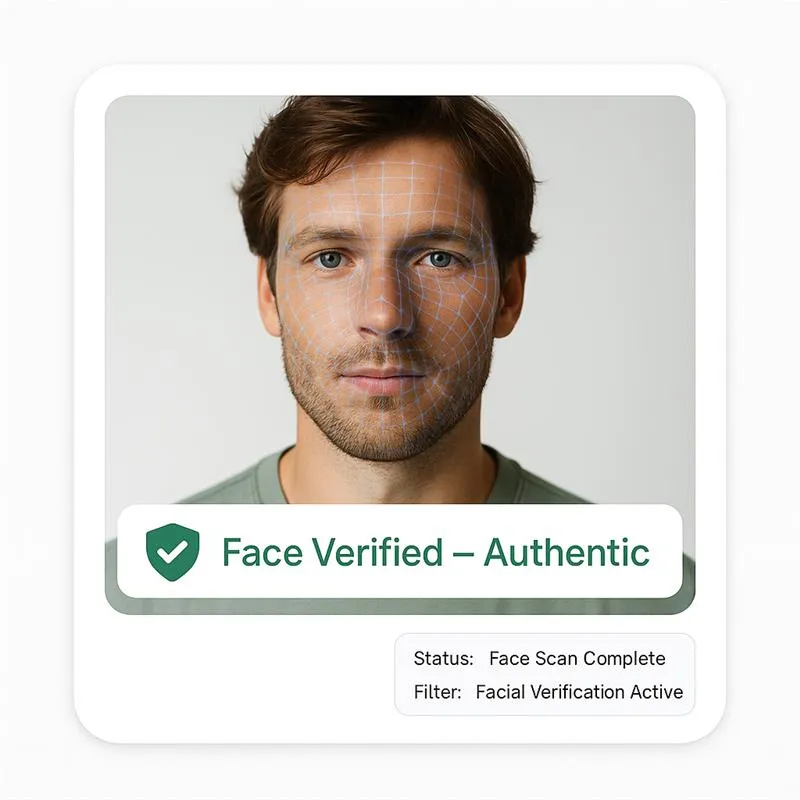

Stop Duplicate Faces, Catfishing & KYC Failures with Real-Time Face Matching

MediaFirewall.ai’s Face Match Filter finds the same face reused across accounts and blocks fake profiles. We match a user’s selfie (live or static) to their ID photo for fast, accurate KYC/identity validation. Face reuse, stolen images, and profile takeovers are flagged before profiles go live. Protect users, cut romance scams, and keep onboarding clean with audit-ready decisions.

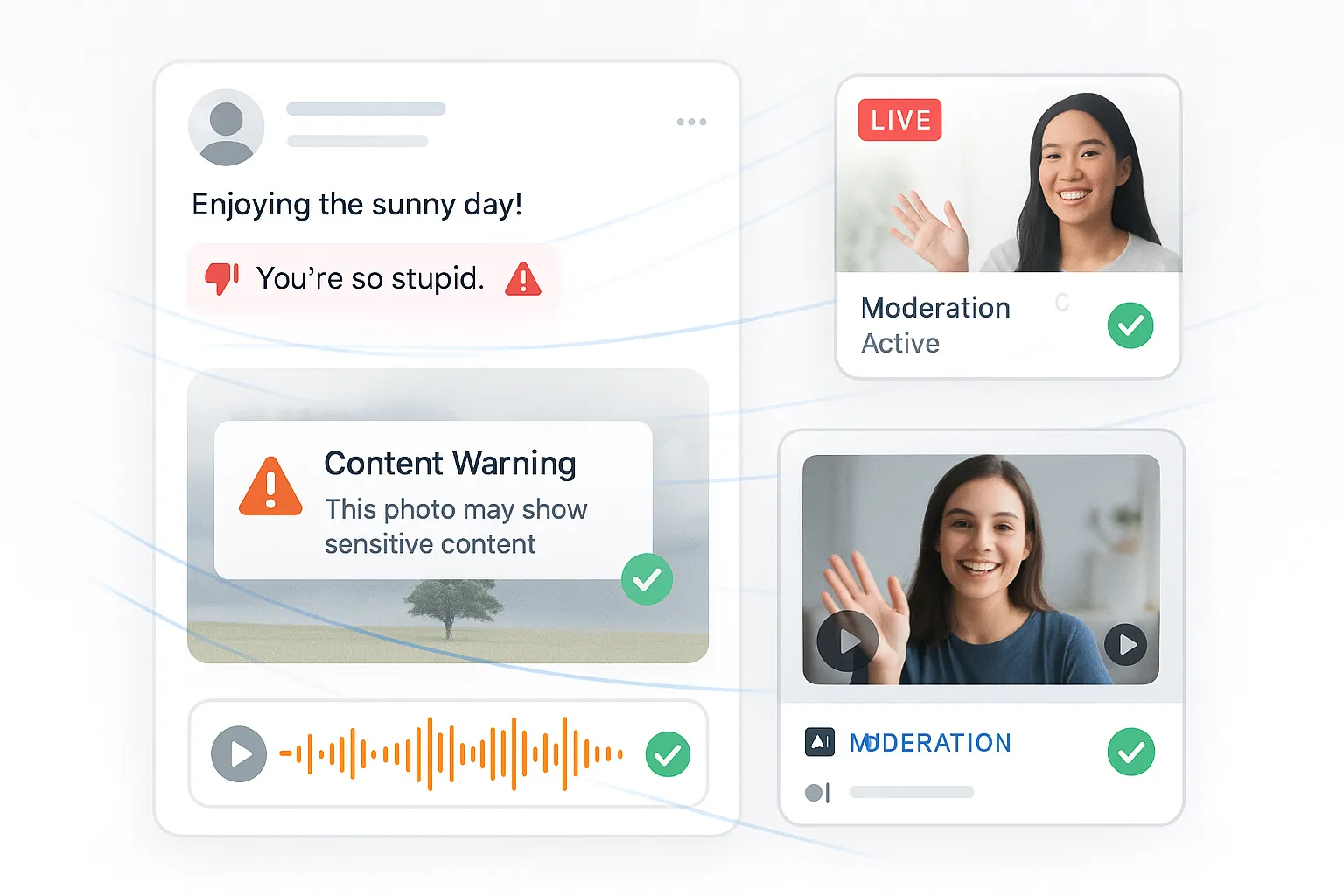

Supported Moderation

Every image, video, or text is checked instantly, so no risks slip through.

What is Face Match Filter

Duplicate-Face Catch

Detects the same face across many accounts, even after crops, filters, or angle changes. Stops romance scams, catfishing, and fake network farms...

KYC Match (Selfie↔ID)

Compares a live or static selfie to the government ID portrait. Confirms that the person onboarding is the person on the ID.

Stolen Photo Defense

Spots web-scraped, celebrity, or stock headshots used as avatars. Blocks screenshot uploads and recycled gallery images.

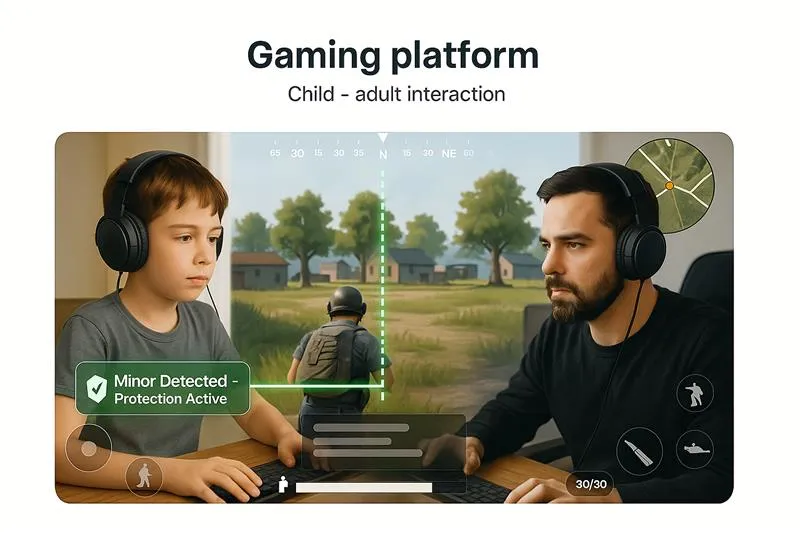

Liveness & Spoof Guard

Checks blink/motion cues and texture to defeat masks and screen replays. Reduces false accepts during verification.

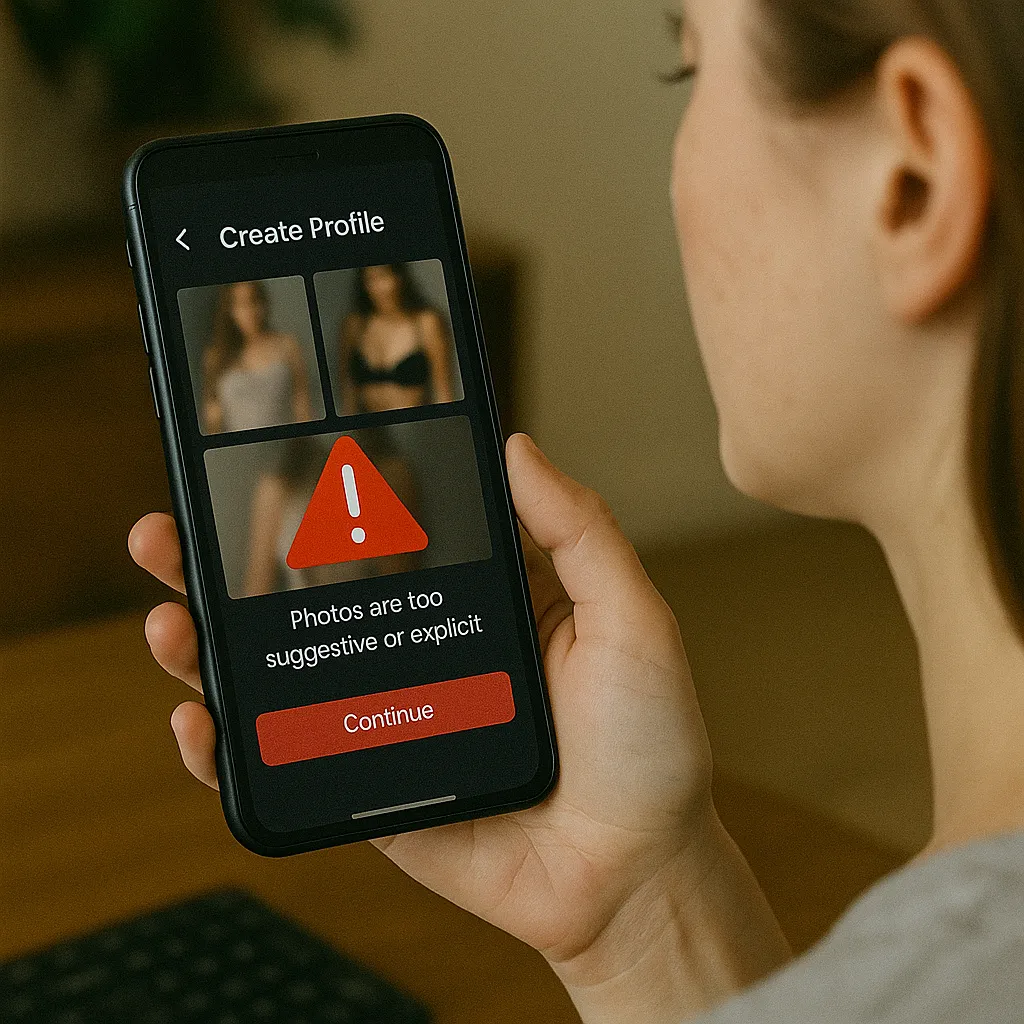

Pre-Visibility Control

Enforces Allow / Recapture / Block / Escalate with clear reasons. Logs evidence for audits, appeals, and compliance.

How our Moderation Works

AI scans faces instantly to verify identity and block impersonation.

Why use MediaFirewall.ai's Face Match Filter

Simply put, our filter is the best value for money out there.

Operational Efficiency at Scale

Automate face verification across millions of uploads and streams, slashing manu...

Real‑Time Livestream Verification

Identify and block impersonators or unverified faces in live sessions with zero ...

Seamless Platform Integration

Deploy quickly via API or SDK. Customizable confidence thresholds and region‑spe...

Built for Policy‑Driven Enforcement

Apply nuanced identity verification policies across geographies, age groups, and...

Face Match Filter FAQ

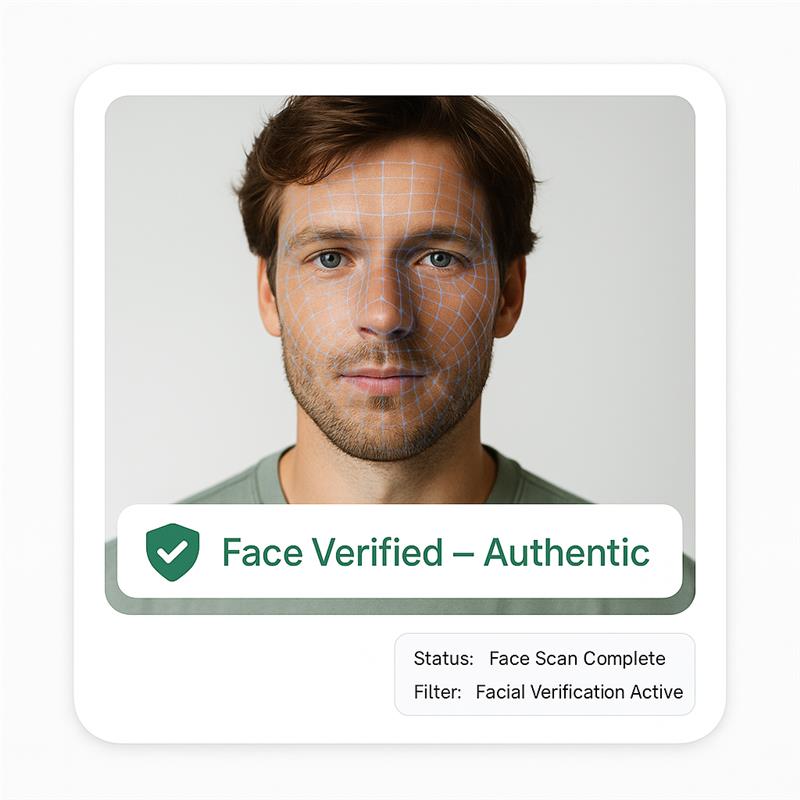

It verifies a user’s face, finds duplicate faces across profiles, and matches selfie to ID to stop fake accounts.

The system compares facial landmarks, ratios, and texture between the selfie and the ID photo, then returns a confidence score with a clear action.
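As a rough illustration of the "confidence score with a clear action" step, the sketch below compares two feature vectors with cosine similarity and maps the score to one of the four actions named on this page. It substitutes generic numeric feature vectors for the landmark, ratio, and texture features mentioned above. The function names and every threshold value are assumptions made for illustration, not MediaFirewall.ai's actual API or defaults.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine similarity of two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat an empty/zero vector as "no match"
    return dot / (norm_a * norm_b)


def decide_action(
    confidence: float,
    allow_at: float = 0.85,      # hypothetical threshold
    recapture_at: float = 0.60,  # hypothetical threshold
    escalate_at: float = 0.40,   # hypothetical threshold
) -> str:
    """Map a selfie-to-ID confidence score to an enforcement action."""
    if confidence >= allow_at:
        return "Allow"
    if confidence >= recapture_at:
        return "Recapture"  # borderline: ask the user for a better photo
    if confidence >= escalate_at:
        return "Escalate"   # ambiguous: send to human review
    return "Block"


selfie_features = [0.9, 0.1, 0.3]  # made-up example values
id_features = [0.9, 0.1, 0.3]
score = cosine_similarity(selfie_features, id_features)
action = decide_action(score)
```

Keeping the thresholds as parameters mirrors the idea of customizable confidence thresholds: a platform with stricter onboarding rules could raise `allow_at` without touching the comparison logic.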

Yes. It tolerates crops, filters, lighting, and small edits to find duplicate-face reuse across the platform.
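One common way to find a reused face is to compare a new photo's feature vector against the vectors already enrolled on the platform and report any that are close enough. The sketch below does this with a simple linear scan. The account IDs, vectors, function names, and the 0.9 threshold are all hypothetical, and this is not a description of how the Face Match Filter is implemented internally.

```python
import math
from typing import Dict, List, Sequence, Tuple


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def find_duplicate_faces(
    query: Sequence[float],
    enrolled: Dict[str, Sequence[float]],
    threshold: float = 0.9,  # hypothetical similarity cutoff
) -> List[Tuple[str, float]]:
    """Return (account_id, score) pairs at or above the threshold, best first."""
    scored = [(account_id, _cosine(query, vec)) for account_id, vec in enrolled.items()]
    matches = [(account_id, s) for account_id, s in scored if s >= threshold]
    return sorted(matches, key=lambda m: m[1], reverse=True)


# Made-up enrolled vectors: "acct_c" is a slightly altered copy of "acct_a".
enrolled_faces = {
    "acct_a": [1.0, 0.0],
    "acct_b": [0.0, 1.0],
    "acct_c": [0.99, 0.1],
}
duplicates = find_duplicate_faces([1.0, 0.0], enrolled_faces)
```

A linear scan is only practical for small collections; a platform-wide search would more plausibly rely on an approximate nearest-neighbor index, which is outside the scope of this sketch.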

Liveness checks (blink, micro-movement) and spoof cues (screen glare, moiré, print artifacts) flag screenshots and masks.

At onboarding, on photo changes, and during re-verification. High-risk reports can trigger instant rechecks.

Yes. It supports KYC/identity validation, reduces fraud, and creates audit-ready logs for compliance.

The system can ask for a recapture and gives simple tips (remove mask, better light, face centered) to pass checks.

Decision, reason, timestamp, and evidence snapshots with minimal retention, aligned to privacy and platform rules.
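The logged fields listed above could be modeled as a small immutable record with an explicit retention window. This is a minimal sketch only: the class name, field names, the `retention_days` default of 30, and the example values are assumptions for illustration, not the product's actual log schema or retention policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class AuditRecord:
    """One moderation decision, kept only for a limited retention window."""
    decision: str                 # e.g. "Allow", "Recapture", "Block", "Escalate"
    reason: str                   # short machine-readable reason code
    timestamp: datetime           # when the decision was made (timezone-aware)
    evidence_refs: List[str] = field(default_factory=list)  # snapshot identifiers
    retention_days: int = 30      # hypothetical retention period

    def expires_at(self) -> datetime:
        """Moment after which the record and its evidence should be purged."""
        return self.timestamp + timedelta(days=self.retention_days)

    def is_expired(self, now: datetime) -> bool:
        return now >= self.expires_at()


record = AuditRecord(
    decision="Block",
    reason="selfie_id_mismatch",
    timestamp=datetime(2024, 1, 1, tzinfo=timezone.utc),
    evidence_refs=["snapshot-001"],
)
```

Storing references to evidence snapshots rather than the images themselves makes it easier to purge the underlying media once `is_expired` returns true.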