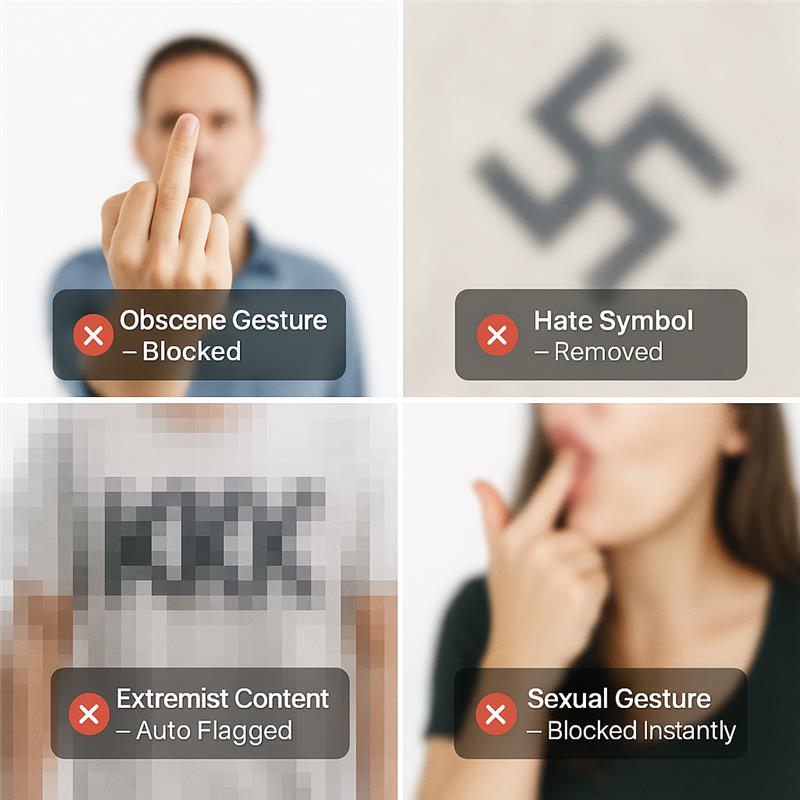

OBSCENE GESTURES AND HATE SYMBOLS FILTER

Stop Obscene Gestures, Hate Symbols & Extremist Cues on Dating Apps with Real-Time AI

Mediafirewall AI detects obscene hand signs, sexualized gestures, and recognized hate symbols across photos, profiles, and short-form video. We flag Nazi-style salutes, coded “OK” hate signs, gang signs, and lewd body language before the content becomes visible to other users. Thumbnails, stickers, tattoos, patches, and background flags are scanned frame-by-frame. Protect users, uphold brand safety, and meet policy/compliance standards with audit-ready enforcement.

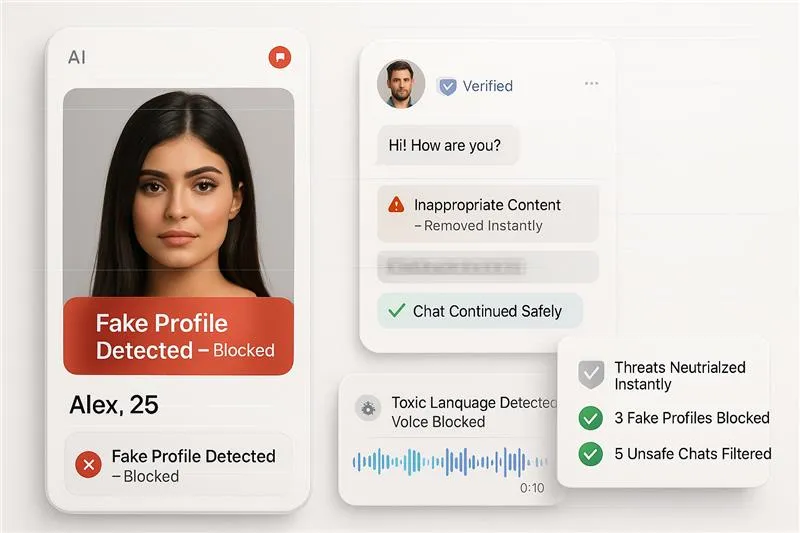

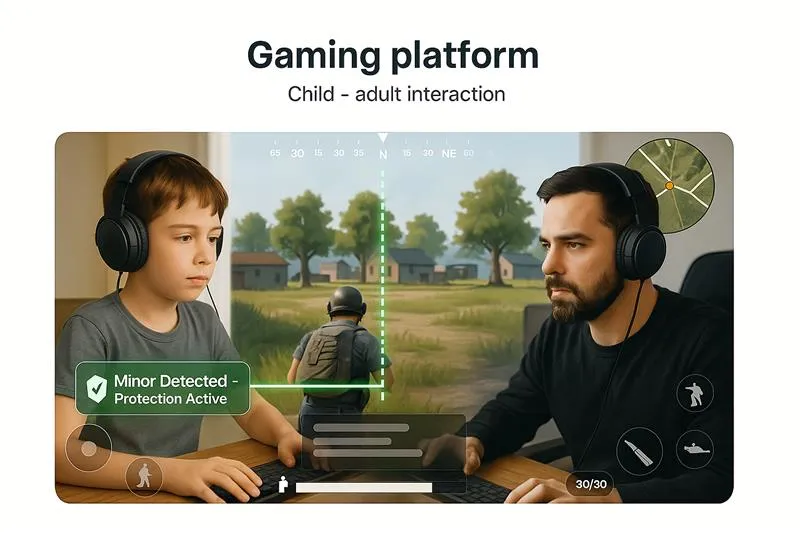

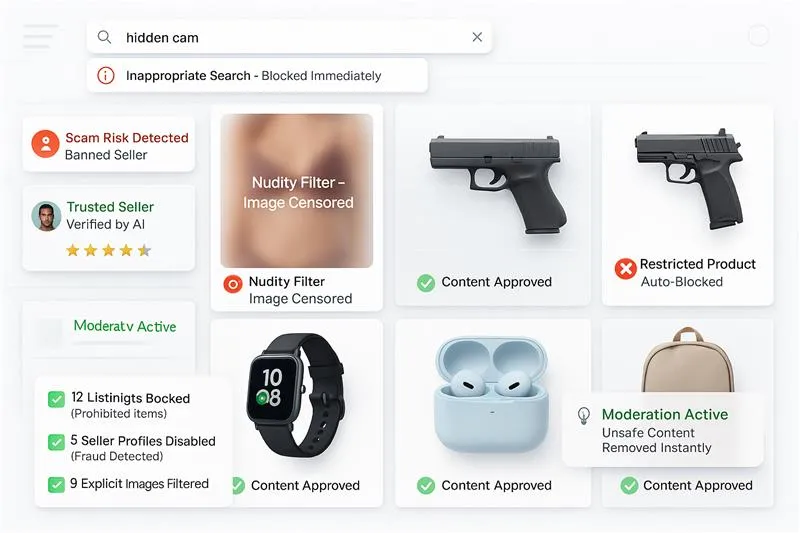

Supported Moderation

Every image, video, and text submission is checked instantly, so no risks slip through.

What Is the Obscene Gestures and Hate Symbols Filter?

Gesture Guard

Detects middle-finger, thrusting/masturbatory motions, licking cues, and innuendo trends in reels/lives. Blocks sexualized, youth-...

Hate Sign Scan

Recognizes raised-arm salutes, Sieg Heil, coded “OK” as hate, and intimidation gang signs. Prevents the spread of extremist or viol...

Insignia & Flags

Spots swastikas, SS bolts, Totenkopf, Confederate flags, and hate-group insignia on apparel/props. Works on tattoos, patches, stic...

Anti-Evasion

Catches crops, color swaps, tiny overlays, mirrored frames, and background placements. OCR reads text-on-image to find coded sloga...

Policy-Aware Actions

Returns clear Allow / Block / Age-gate / Escalate outcomes with reasons and JSON logs. Aligns with regional safety rules and platfo...

How our Moderation Works

Mediafirewall AI’s filter reviews every uploaded image, video frame, and livestream and scans it for banned gestures or symbols. It uses contextual visual intelligence to reduce false positives, then auto-enforces actions based on your predefined moderation policies, without disrupting the user journey or platform performance.
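As a rough illustration of the enforcement step described above, the sketch below maps detections to one of the Allow / Block / Age-gate / Escalate outcomes. It is not Mediafirewall's API: the label names, the `POLICY` table, the severity ordering, and the `decide` function are all assumptions made for this example.

```python
import json
from dataclasses import dataclass

# Assumed severity ordering for this sketch: later entries override earlier ones.
ACTION_SEVERITY = ["allow", "age_gate", "escalate", "block"]

# Hypothetical policy table (detection label -> action); labels are illustrative.
POLICY = {
    "middle_finger": "age_gate",
    "gang_sign": "escalate",
    "nazi_salute": "block",
    "swastika": "block",
}


@dataclass
class Detection:
    label: str
    confidence: float


def decide(detections, policy=POLICY):
    """Return the most severe action required by any detection, with reasons."""
    action = "allow"
    reasons = []
    for det in detections:
        candidate = policy.get(det.label, "allow")
        if candidate != "allow":
            reasons.append(f"{det.label} ({det.confidence:.2f}) -> {candidate}")
        if ACTION_SEVERITY.index(candidate) > ACTION_SEVERITY.index(action):
            action = candidate
    return {"action": action, "reasons": reasons}


decision = decide([Detection("middle_finger", 0.91), Detection("swastika", 0.88)])
print(json.dumps(decision))
```

Taking the most severe action across all detections keeps the outcome predictable when a single frame contains several findings; a real policy may well order or combine actions differently.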

Why use Mediafirewall's Obscene Gestures and Hate Symbols Filter?

For platforms that host visual content, obscene gestures and hate symbols aren’t just a nuisance; they’re a liability. Here’s how Mediafirewall helps enterprises keep them off the platform:

Block Visual Misconduct Before It Spreads

Flags obscene signs and hate symbols, even if disguised or partial, before users...

Protect Brand in Visual-First Platforms

Safeguard credibility across images, video calls, and live streams, where visual...

Regional Symbol Intelligence

Understands what’s offensive by country or context, tailored for global platform...

Zero Manual Review, Continuous Precision

No queues, no lag. Detection evolves with new gestures and symbols automatically...

Obscene Gestures and Hate Symbols Filter FAQ

Pose estimation + motion analysis track hands/arms/tongue cues over time, flagging thrusting, licking, and middle-finger gestures before users see them.

Dating surfaces enable rapid 1:1 exposure; catching salutes, coded “OK” hate signs, and gang signs prevents harassment, intimidation, and off-app escalation.

Checks run at upload, before content becomes visible; continuously during livestreams (sub-second checks); and again whenever a thumbnail or caption is edited.

Symbols are detected on clothing patches, tattoos, stickers, room décor, posters, and tiny lapel pins; the filter scans backgrounds, thumbnails, and frames.

Users and moderators gain safer interactions; brands avoid association with obscene or extremist visuals; platforms maintain trust and app-store compliance.

Yes, variant-tolerant matching handles partial occlusion, recolors, stylization, and mirrored layouts to reduce evasion.

Context rules and confidence thresholds reduce false positives; ambiguous cases can route to age-gate or escalate instead of block.

Each action includes policy references, timestamps, and evidence snapshots in audit-ready logs, supporting GDPR/DSA/COPPA-aligned workflows.
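To make the confidence-threshold routing and audit logging described in the last two answers more concrete, here is a minimal sketch. The threshold values, field names, policy reference string, and the `route` and `audit_record` helpers are hypothetical and do not reflect Mediafirewall's actual log schema.

```python
import hashlib
import json
from datetime import datetime, timezone

# Assumed thresholds for illustration only; real values would be tuned per policy.
BLOCK_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.60


def route(confidence, sexualized=False):
    """Block confident hits; send ambiguous ones to age-gate or escalation."""
    if confidence >= BLOCK_THRESHOLD:
        return "block"
    if confidence >= REVIEW_THRESHOLD:
        # Assumed context rule: ambiguous sexualized gestures are age-gated,
        # other ambiguous findings are escalated for review.
        return "age_gate" if sexualized else "escalate"
    return "allow"


def audit_record(content_id, label, confidence, policy_ref, evidence, sexualized=False):
    """Build a JSON-serializable log entry for one moderation action."""
    return {
        "content_id": content_id,
        "label": label,
        "confidence": confidence,
        "action": route(confidence, sexualized),
        "policy_ref": policy_ref,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Hash of the evidence snapshot bytes, so the log can reference the
        # snapshot without embedding the image itself.
        "evidence_sha256": hashlib.sha256(evidence).hexdigest(),
    }


record = audit_record(
    "upload-123", "coded_ok_sign", 0.72, "HATE-SYMBOLS-4.2", b"frame-bytes"
)
print(json.dumps(record, indent=2))
```

Storing a hash rather than the snapshot itself is one possible design choice; it keeps log entries small and lets the evidence be stored under separate retention rules.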