MINOR DETECTION FILTER

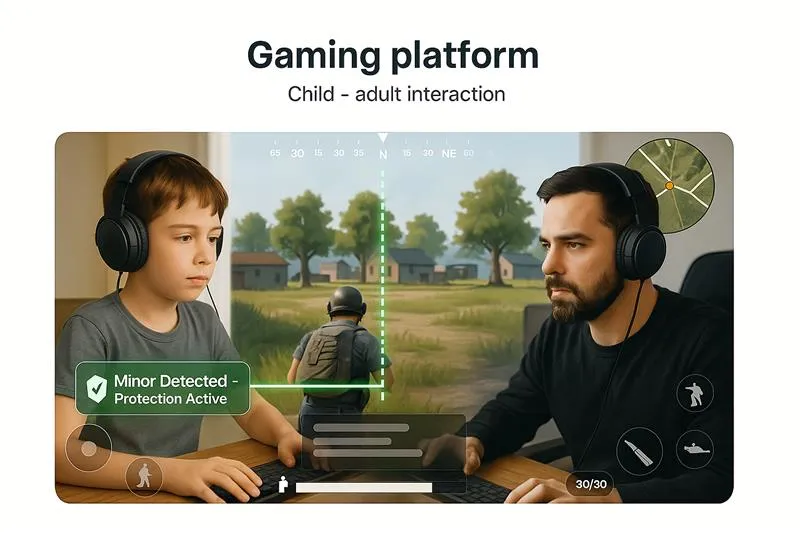

Stop Under-18 Exposure, Synthetic “Child” Media & Risky Contexts with Real-Time AI

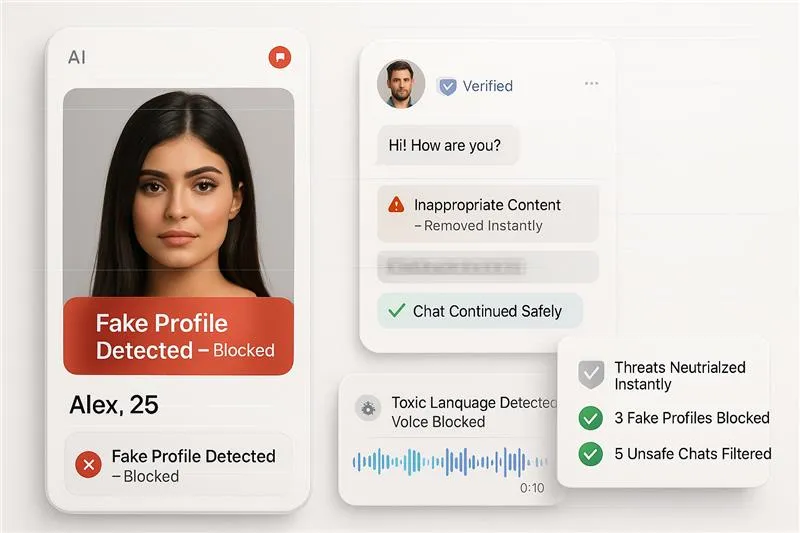

Mediafirewall AI’s Minor Detection Filter estimates age from faces and flags under-18 content across images, video, and livestreams. It detects minors in adult contexts and finds AI-generated or deepfake “child” media designed to evade rules. Context checks cover adult–child proximity and suspicious environments (school/home cams). Protect users, reduce CSAM risk, and support COPPA/GDPR/DSA/DPDP compliance with audit-ready actions.

Supported Moderation

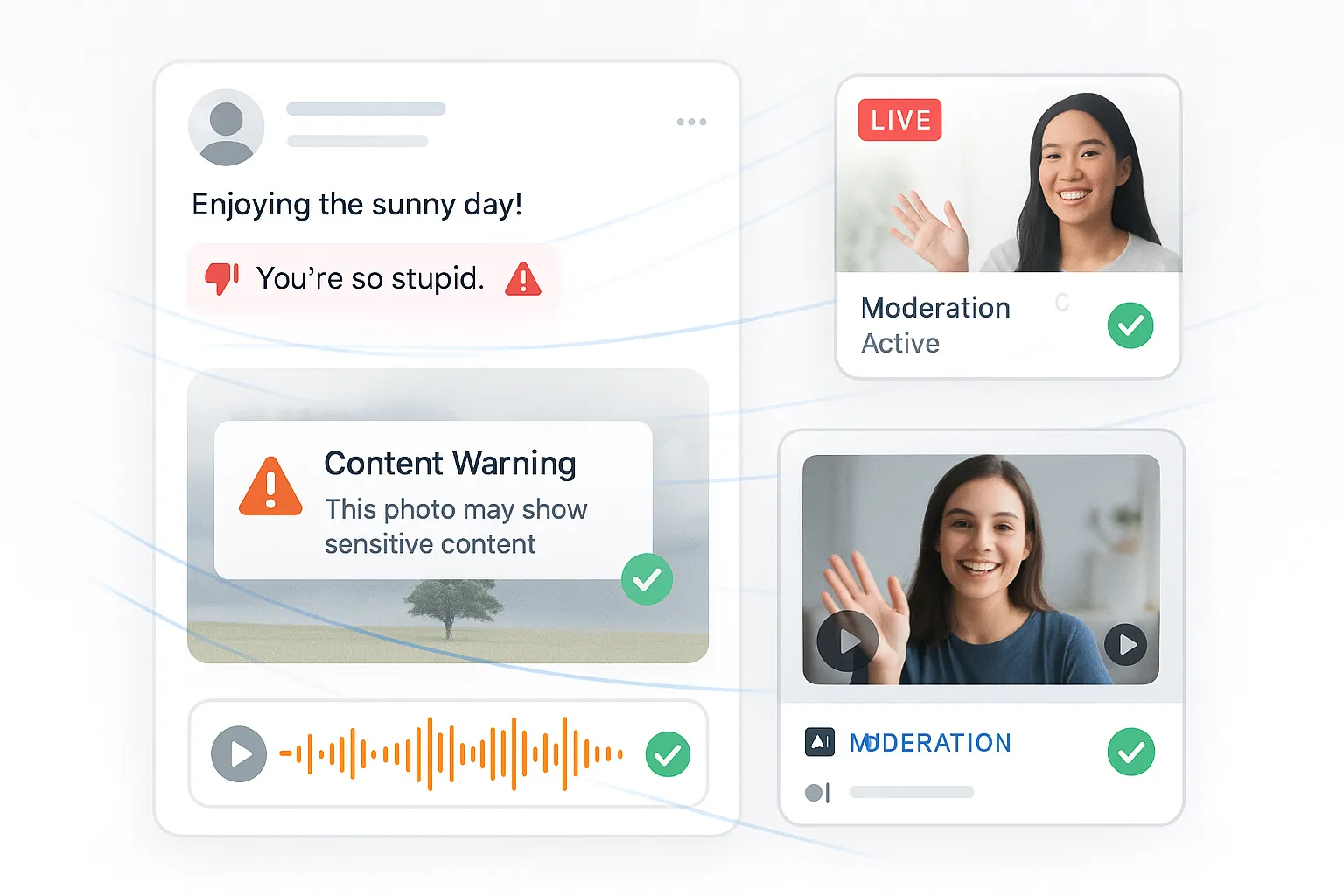

Every image, video, or text is checked instantly so that risky content is caught before it reaches users.

What Is the Minor Detection Filter?

Per-Face Age Check

Estimates under-13 / 13–17 / 18+ from facial proportions, texture, and structure. Works on single photos, group shots, and video f...

Risky Context Flags

Detects minors in adult or NSFW settings, suggestive poses, or violent scenes. Routes to block / age-gate / escalate per policy.

Synthetic Minor Defense

Finds AI-generated, deepfake, or cartoon-style “child” content used to bypass filters. Prevents distribution of policy-barred medi...

Profile & Metadata Checks

Catches deceptive ages, fake documents, and signals of school/home camera sources. Reduces grooming risk and under-age onboarding.

Real-Time, Multi-Surface

Enforces decisions on uploads, edits, thumbnails, and livestreams. Returns Allow / Warn / Block / Escalate with reasons and evidenc...
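
The cards above describe a flow from per-face age brackets and context flags to one of four outcomes. As a rough illustration only, the Python sketch below shows how a platform might express such a policy on its own side. Every name in it (`FaceEstimate`, `decide`, the context labels, the `min_confidence` default, and the rule ordering) is a hypothetical assumption, not Mediafirewall's API or its actual decision logic.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, Tuple


class Bracket(Enum):
    UNDER_13 = "under-13"
    TEEN = "13-17"
    ADULT = "18+"


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class FaceEstimate:
    """One detected face (hypothetical shape, not a vendor type)."""
    bracket: Bracket
    confidence: float  # assumed to lie in [0.0, 1.0]


# Hypothetical context labels, loosely mirroring the "risky context" card.
RISKY_CONTEXTS = {"nsfw", "suggestive", "violent"}


def decide(
    faces: Iterable[FaceEstimate],
    contexts: Iterable[str],
    synthetic: bool = False,
    min_confidence: float = 0.8,  # assumed platform-chosen threshold
) -> Tuple[Action, str]:
    """Map per-face estimates and context flags to (action, reason)."""
    minors = [f for f in faces if f.bracket is not Bracket.ADULT]
    if not minors:
        return Action.ALLOW, "no under-18 face detected"
    if synthetic:
        return Action.BLOCK, "synthetic media depicting a minor"

    risky = sorted(RISKY_CONTEXTS & set(contexts))
    if risky:
        labels = ", ".join(risky)
        # If no minor estimate is confident, hand off to review
        # instead of blocking automatically.
        if max(f.confidence for f in minors) < min_confidence:
            return Action.ESCALATE, "low-confidence minor in risky context: " + labels
        return Action.BLOCK, "minor in risky context: " + labels

    return Action.WARN, "under-18 face in non-risky context"
```

In this sketch a low-confidence estimate in a risky context escalates rather than blocks; that is one possible design choice, and a stricter policy could block in both cases.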

How our Moderation Works

Using advanced visual estimation, the Minor Detection Filter assesses both the content of the media and the age of the uploader. Content that violates pre-configured standards is immediately flagged, blocked, or escalated, without impacting user experience or requiring human review.

Why Mediafirewall’s Minor Detection Filter?

When age isn't clear, safety can't be optional. This filter goes beyond detection, enabling platforms to identify minors and enforce protection at scale.

Child-First Visual Safety

Designed to prevent accidental or malicious exposure to explicit visuals on plat...

Self-Publishing Risk Prevention

Detects uploads from users visually estimated to be underage, helping platforms ...

Policy Enforcement with No Manual Overhead

Works across images, recorded video, and live streams with precision rules by ag...

Enterprise-Grade Compliance and Control

Trusted by platforms handling sensitive user demographics. Configurable to meet ...

Minor Detection Filter FAQ

It estimates age brackets for each face and flags under-18 content or risky contexts before posts are visible.

Using facial landmarks, proportions, and texture plus scene context. Results include confidence scores for your thresholds.

Yes. It gives per-face ages in group images and frame-by-frame checks in videos and livestreams.

Yes. It flags synthetic minors and manipulated faces/bodies, including cartoon-style where policy prohibits.

It runs pre-visibility at upload and on edits, plus continuous checks in livestreams, so risky media never reaches users.

Policy-aware outcomes can age-gate, warn, limit reach, or escalate instead of auto-blocking when context allows.

We store decision, reason, timestamp, and minimal evidence aligned with COPPA, GDPR/DSA, DPDP and your policy.

It prevents under-age exposure, reduces grooming risk, and supports app-store and legal compliance with audit-ready logs.
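
The answers above mention an audit record holding a decision, reason, timestamp, and minimal evidence. The Python sketch below shows one way a platform could build such a record for its own logs. The function name `audit_record`, the field names, and the choice of a SHA-256 digest as the "minimal evidence" are all assumptions made for illustration; the source does not specify the actual record format.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def audit_record(
    decision: str,
    reason: str,
    media_bytes: bytes,
    now: Optional[datetime] = None,
) -> dict:
    """Build a log entry that keeps a content digest, not the media itself."""
    moment = now if now is not None else datetime.now(timezone.utc)
    return {
        "decision": decision,
        "reason": reason,
        "timestamp": moment.isoformat(),
        # Assumption: a hash stands in for "minimal evidence" so the
        # flagged media is not copied into the log.
        "evidence_sha256": hashlib.sha256(media_bytes).hexdigest(),
    }


def to_log_line(record: dict) -> str:
    """Serialize one record as a single JSON line with stable key order."""
    return json.dumps(record, sort_keys=True)
```

Keeping only a digest lets an auditor confirm which item a decision referred to without the log itself becoming a store of sensitive media; whether that satisfies a given regulation is a question for the platform's own legal review.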