DISTURBING VISUAL FILTER

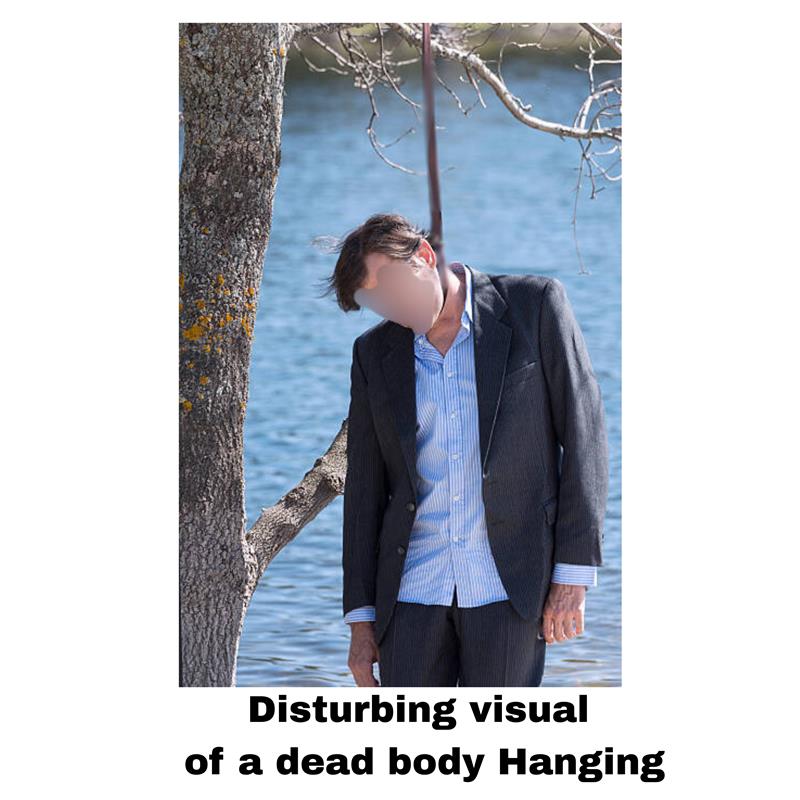

Stop Self-Harm Scenes, Corpse Imagery & Aftermath Shock Content with Real-Time AI

Mediafirewall AI’s Disturbing Visual Filter detects self-harm/suicide imagery, corpses, morgue footage, and war/disaster aftermath. We enforce pre-visibility moderation across images, video, carousels, and livestreams to prevent trauma and contagion. Policy-aware decisions block glorification, shock-bait thumbnails, and re-uploads with audit-ready logs. Protect users, uphold duty of care, and meet GDPR/DSA/COPPA-aligned safety standards at scale.

Supported Moderation

Every image, video, or text is checked instantly, so no risks slip through.

What Is the Disturbing Visual Filter?

Self-Harm Blocks

Flags razor cuts, ligatures, attempts, and tools; detects language/overlays encouraging harm. Prevents exposure and reduces contagi...

Corpse Detection

Identifies bodies (blurred or not), morgue/body-bag scenes, decomposition, and dismemberment. Routes to block or restricted visibil...

Aftermath Control

Detects war/disaster aftermath, mass-casualty scenes, graphic injuries, and on-scene shock media. Stops shock-bait thumbnails and s...

Context & Warnings

Distinguishes news/educational context from gratuitous harm; can apply age-gates and interstitials. Links every decision to documen...

Anti-Evasion Guard

Catches partial crops, color shifts, tiny overlays, memes, and coded captions/hashtags. Works on uploads, edits, and frame-by-frame...

How our Moderation Works

Mediafirewall’s Disturbing Visual Filter evaluates each visual submission (image, video, or livestream frame) using deep pattern and sentiment analysis. It recognizes psychological triggers and distressing elements such as deformity, gore, or unnatural movement.

Why Mediafirewall AI’s Disturbing Visual Filter?

Stop graphic shocks before they surface: our AI filter blocks disturbing visuals in every format.

Proactively Protects Mental Well-Being

Filters deeply unsettling content, beyond violence or nudity, before it can traum...

Designed for Subtlety

Screens for indirect threats: creepy imagery, grotesque bodies, graphic wounds, ...

Zero Review Bottlenecks

Fully autonomous. Avoids human escalation queues and reduces mental toll on cont...

Enterprise-Class Safety & Control

Whether you’re an education portal or a dating app, the filter adapts sensitivi...

Disturbing Visual Filter FAQ

It analyzes pixels, motion, and text-on-image to detect self-harm, corpse imagery, morgue scenes, and aftermath visuals, returning Boolean, policy-linked actions before visibility.
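As a rough illustration of what a Boolean, policy-linked decision could look like, the sketch below takes per-category confidence scores and returns an allow/block flag together with the policy clause that triggered it. The category names, thresholds, policy IDs, and the `decide` function are all hypothetical; they are assumptions for illustration and not Mediafirewall AI's actual API.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical policy table: category -> (score threshold, policy clause ID).
# These names and numbers are illustrative assumptions, not vendor values.
POLICY = {
    "self_harm": (0.80, "POL-SH-1"),
    "corpse": (0.85, "POL-CI-2"),
    "aftermath": (0.90, "POL-AF-3"),
}


@dataclass(frozen=True)
class Decision:
    allowed: bool                # the Boolean outcome
    category: Optional[str]      # which category triggered the block, if any
    policy_id: Optional[str]     # the policy clause the action maps to


def decide(scores: Dict[str, float]) -> Decision:
    """Block on the highest-scoring category that meets its threshold."""
    hits = [
        (score, category)
        for category, score in scores.items()
        if category in POLICY and score >= POLICY[category][0]
    ]
    if not hits:
        return Decision(allowed=True, category=None, policy_id=None)
    _, category = max(hits)
    return Decision(allowed=False, category=category, policy_id=POLICY[category][1])
```

Under these assumptions, `decide({"self_harm": 0.95, "corpse": 0.10})` yields a blocked decision tied to the hypothetical clause `POL-SH-1`, which is the kind of record an audit log could store.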

Because exposure can traumatize audiences and increase suicide contagion; pre-publish gating stops harm and limits virality.

At upload and on thumbnail/caption edits, plus continuous scanning in livestreams with sub-second decisions.

In thumbnails, carousels, background props, cropped frames, and text overlays; the filter scans media and embedded text together.

Users, creators, and safety teams: safer feeds, fewer appeals, stronger brand safety, and measurable reduction in traumatic exposure.

Yes. Context-aware rules can age-gate, warn, or limit reach for journalistic or PSA content instead of outright removal.
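One way to picture context-aware rules is a small lookup from a context label to the softer actions named above, with removal as the fallback. This is a minimal sketch: the context labels, the `CONTEXT_RULES` table, and the `actions_for` function are hypothetical, and how context is actually classified is not described in the source.

```python
from enum import Enum
from typing import List


class Action(Enum):
    BLOCK = "block"
    AGE_GATE = "age_gate"
    WARN = "warn"
    LIMIT_REACH = "limit_reach"


# Hypothetical mapping from context label to softer actions (an assumption).
CONTEXT_RULES = {
    "journalistic": [Action.AGE_GATE, Action.WARN],
    "psa": [Action.WARN, Action.LIMIT_REACH],
}


def actions_for(context: str, flagged: bool) -> List[Action]:
    """Return the actions to apply to one piece of media."""
    if not flagged:
        return []  # nothing detected, so nothing to enforce
    # Unknown or gratuitous contexts fall back to outright removal.
    return CONTEXT_RULES.get(context, [Action.BLOCK])
```

Keeping the rules in a plain table rather than in branching code means a policy team could adjust them per platform without touching the enforcement logic.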

Anti-evasion checks detect crops, recolours, mirrored frames, meme wrappers, and edited captions/hashtags designed to slip through.
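To make the idea of catching recoloured or mirrored re-uploads concrete, here is a minimal sketch using a generic average hash. This is a well-known perceptual hashing technique swapped in for illustration; the source does not say which method Mediafirewall AI uses. The sketch assumes both images are already reduced to same-sized grayscale grids, and it does not handle crops. All function names are hypothetical.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255, row-major


def average_hash(img: Image) -> List[bool]:
    """One bit per pixel: True where the pixel is brighter than the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [p > mean for p in pixels]


def hamming(a: List[bool], b: List[bool]) -> int:
    """Number of differing bits between two equal-length hashes."""
    return sum(x != y for x, y in zip(a, b))


def mirror(img: Image) -> Image:
    """Horizontally flip the image."""
    return [list(reversed(row)) for row in img]


def is_reupload(candidate: Image, known: Image, max_distance: int = 1) -> bool:
    """Match the candidate against the known image and its mirrored copy."""
    known_hashes = [average_hash(known), average_hash(mirror(known))]
    cand = average_hash(candidate)
    return any(hamming(cand, h) <= max_distance for h in known_hashes)
```

Because each bit compares a pixel with the image's own mean, a uniform brightness shift leaves the hash unchanged, and hashing the flipped copy covers mirrored frames. A production system would typically downscale to a fixed grid (for example 8x8) before hashing.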

Mediafirewall AI provides audit-ready logs and minimal data retention aligned with GDPR/DSA/COPPA, mapping each action to your policy.