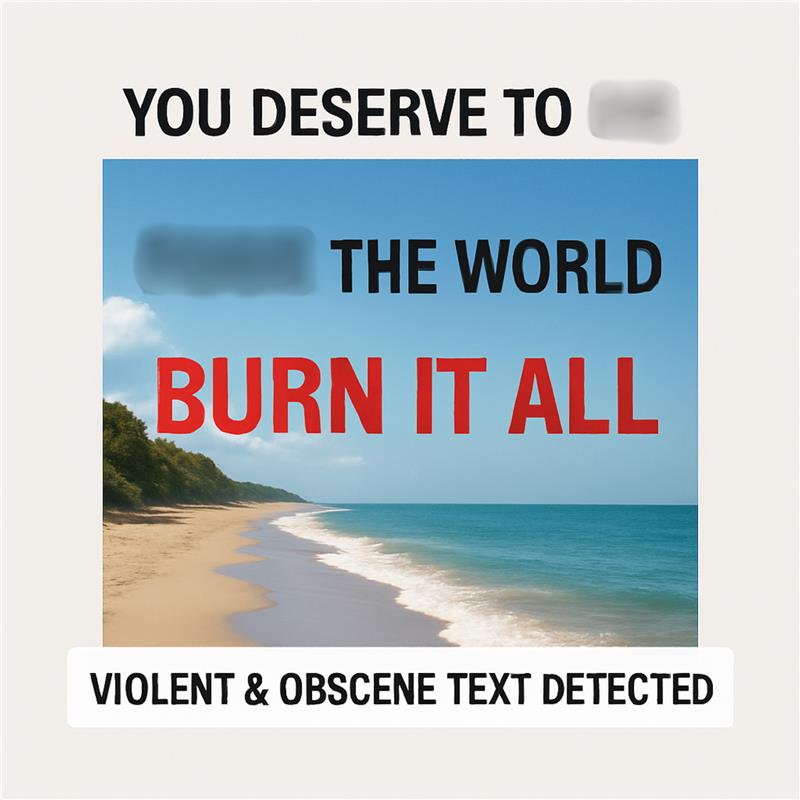

VIOLENT AND OBSCENE TEXT ON IMAGE FILTER

Stop Obscene Words, Slurs & Violent Threats in Images with Real-Time AI

Mediafirewall AI detects bad words, slurs, and violent threats embedded in images and memes. We read tiny, stylized, or masked text on shirts, signs, and backgrounds before it goes live. The filter blocks sexual/obscene language and calls for harm or suicide in uploads and thumbnails. Protect users, prevent abuse, and stay compliant with clear, audit-ready actions.

Supported Moderation

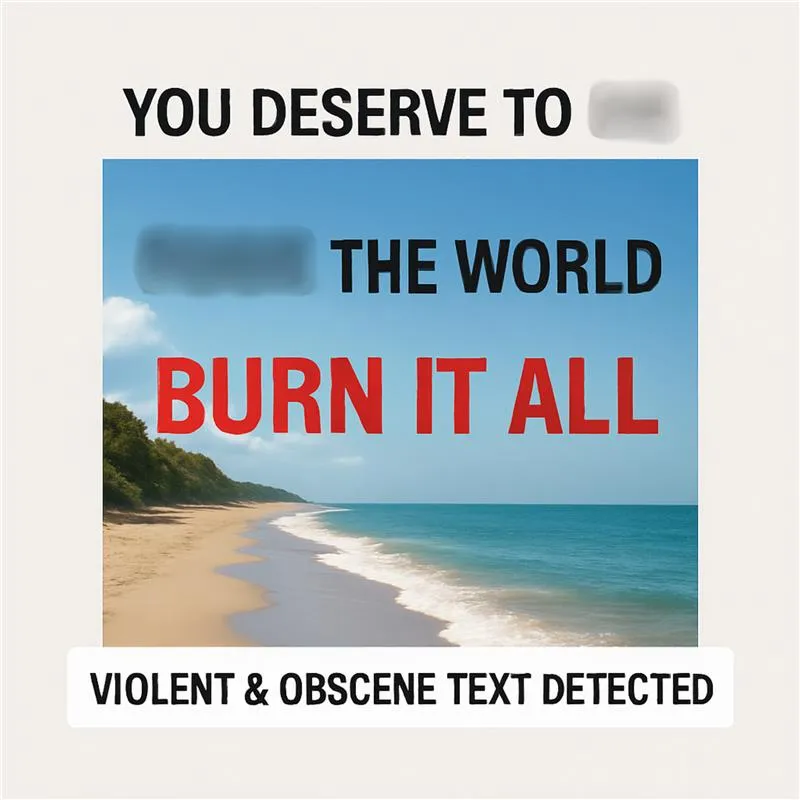

Every image is scanned for harmful text, so abusive content is caught before it goes live.

What is the Violent and Obscene Text on Image Filter?

Find Hidden Text

OCR reads small, curved, or distorted fonts plus leetspeak (f*ck, sh!t) and symbol swaps. Catches text others miss in memes, stick...

Block Obscenity

Detects sexual terms, slurs, and vulgar insults printed on clothing, posters, or props. Keeps profiles and feeds clean and brand-s...

Stop Threats

Flags “kill,” “die,” “blow it up,” and other violent calls to action wherever they appear. Prevents harm messaging and self-harm e...

Context Aware

Understands protest photos, deepfake posters, and visual rants with threatening slogans. Applies your policy: allow/warn/age-gate/b...

Pre-Visibility Control

Works on uploads, edits, thumbnails, and reels with real-time checks. Returns boolean, policy-linked outcomes and evidence logs Sh...

How our Moderation Works

Mediafirewall AI scans text within images in real time, blocking violent or obscene language to prevent harmful content from reaching users.

Why Mediafirewall AI’s Violent and Obscene Text on Image Filter?

Inappropriate text on images can harm user trust and brand integrity. This filter catches it instantly—keeping your platform safe, respectful, and protected.

Instant Protection Across Every Text Field

From bios to product listings and live chat, unsafe language is stopped instantly...

Fully Configurable Policy Settings

Customize what counts as 'inappropriate' across languages, regions, and platfor...

Reduces Escalations and Manual Review

With fewer violations reaching users, trust & safety teams spend less time triag...

Auditable for Legal and Policy Teams

Every block is traceable, supporting transparency, compliance, and evidence-led e...

Violent and Obscene Text on Image Filter FAQ

It’s a tool that finds bad words, slurs, sexual text, and violent threats written inside images (memes, thumbnails, screenshots) before they go live.

It uses OCR to read tiny, curved, distorted, low-contrast text and leetspeak (like f*ck, sh!t, b!tch) on clothes, signs, and backgrounds.
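To make the leetspeak handling concrete, here is a minimal sketch of how text already extracted by OCR could be normalized and checked. It is not Mediafirewall AI's implementation: the substitution table `LEET_MAP`, the placeholder `BLOCKLIST`, and the function names are all assumptions made for illustration, and the OCR step itself is out of scope.

```python
import re
from typing import List

# Hypothetical substitution table (assumption); a real system would need far more rules.
LEET_MAP = str.maketrans({
    "!": "i", "1": "i", "3": "e", "4": "a", "@": "a",
    "0": "o", "$": "s", "5": "s", "7": "t",
})

# Placeholder blocklist for illustration only (assumption).
BLOCKLIST = {"kill", "die", "shit"}


def normalize(token: str) -> str:
    """Lowercase the token and undo common symbol swaps."""
    return token.lower().translate(LEET_MAP)


def matches_blocklist(token: str) -> bool:
    """Return True if the token matches a blocked term, treating '*' as one masked character."""
    norm = normalize(token)
    pattern = re.compile("".join("." if ch == "*" else re.escape(ch) for ch in norm))
    return any(pattern.fullmatch(term) for term in BLOCKLIST)


def flag_tokens(ocr_text: str) -> List[str]:
    """Return the whitespace-separated tokens of OCR output that match the blocklist."""
    flagged = []
    for raw in ocr_text.split():
        stripped = raw.strip(".,?;:\"'")
        # '!' is ambiguous: it can be a swapped 'i' or trailing punctuation, so try both readings.
        candidates = (stripped, stripped.rstrip("!"))
        if any(matches_blocklist(c) for c in candidates):
            flagged.append(stripped)
    return flagged
```

Calling `flag_tokens` on a caption string returns only the tokens that resolve to a blocked term after normalization, so the same check covers plain, swapped, and partially masked spellings.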

It blocks profanity, hate slurs, sexual language, and threats of harm or self-harm, plus weapon/assault references shown as text in images.

Images with obscene or violent text hurt user safety and brand trust. Real-time AI moderation keeps profiles, feeds, and thumbnails safe and compliant.

Before posting. By default, checks run at upload, on edits, and when thumbnails change, so harmful images are stopped before users see them.

Often in memes, t-shirts/hoodies, protest signs, room décor, stickers, image captions, and edited thumbnails used for shock or clickbait.

The system is policy-aware. It can allow, warn, age-gate, limit reach, or block based on your rules and the context.
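As a rough illustration of policy-linked outcomes, the sketch below maps detected text categories to one of the five actions named above and returns the strictest one. The category names, the severity ordering, and the `EXAMPLE_POLICY` table are hypothetical; the actual configuration format is not described here.

```python
from enum import IntEnum
from typing import Dict, Iterable


class Action(IntEnum):
    # Ordering from least to most restrictive is an assumption for this sketch.
    ALLOW = 0
    WARN = 1
    AGE_GATE = 2
    LIMIT_REACH = 3
    BLOCK = 4


# Hypothetical policy table: category name -> action.
EXAMPLE_POLICY: Dict[str, Action] = {
    "profanity": Action.WARN,
    "sexual_language": Action.AGE_GATE,
    "hate_slur": Action.BLOCK,
    "threat": Action.BLOCK,
    "self_harm": Action.BLOCK,
}


def decide(categories: Iterable[str],
           policy: Dict[str, Action] = EXAMPLE_POLICY,
           default: Action = Action.ALLOW) -> Action:
    """Pick the most restrictive action among all detected categories."""
    return max((policy.get(c, default) for c in categories), default=default)
```

Taking the maximum means a single high-severity finding decides the outcome even when milder findings are also present. A platform with different rules would swap in its own table rather than change the function.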

Each decision has a clear result, reason, time, and evidence snapshot. Logs support online-safety, ad-policy, and regional rules.
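One way to picture such a log entry is a small immutable record with the four fields listed above, serialized as one JSON line. This is a sketch under assumptions: the field names, the ISO 8601 timestamp, and the idea of storing the evidence snapshot as a reference string are illustrative choices, not the product's documented log schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    result: str             # e.g. "block" or "allow" (assumed values)
    reason: str             # category or rule that triggered the decision
    time: str               # ISO 8601 timestamp in UTC
    evidence_snapshot: str  # reference to the stored evidence (hypothetical format)


def make_record(result: str, reason: str, evidence_snapshot: str,
                now: Optional[datetime] = None) -> DecisionRecord:
    """Build a record, stamping it with the current UTC time unless one is supplied."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return DecisionRecord(result, reason, timestamp, evidence_snapshot)


def to_log_line(record: DecisionRecord) -> str:
    """Serialize the record as one JSON line with stable key order."""
    return json.dumps(asdict(record), sort_keys=True)
```

Keeping the record frozen and the key order stable makes entries easier to compare and audit later.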