DISTINGUISHED PERSONALITY ABUSE PROTECTION FILTER

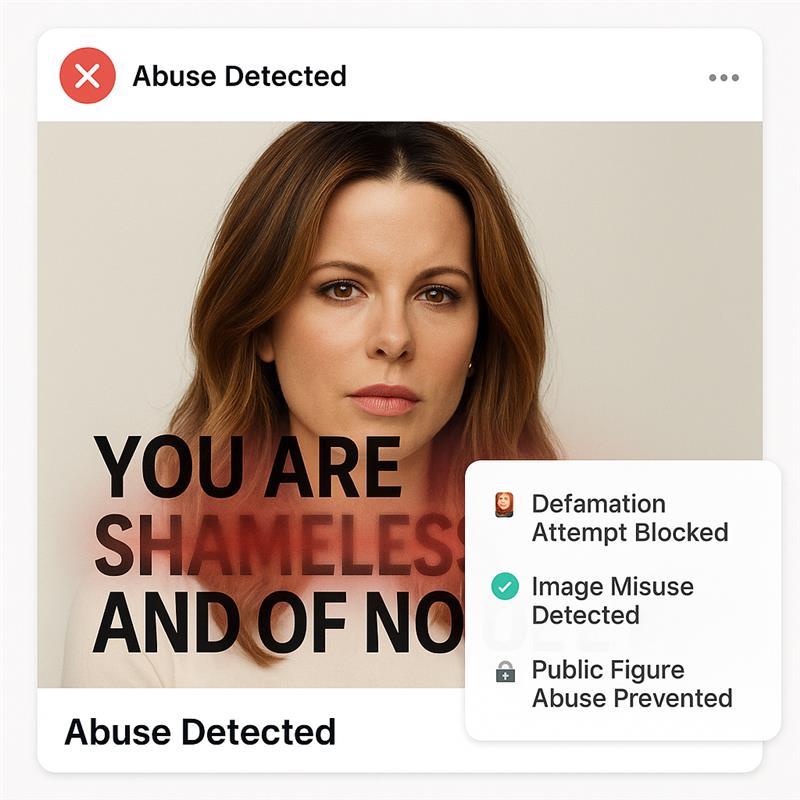

Stop Abuse, Harassment & Defamation Toward Public Figures with Real-Time AI

Mediafirewall AI’s Distinguished Personality Abuse Protection Filter detects abusive text and visuals targeting public figures. It flags slurs, threats, violent references, and defamatory memes in posts, images, and videos before they go live. Context rules handle satire and news while blocking harassment and coordinated pile-ons. Protect reputations, reduce harm, and keep communities safe with audit-ready actions.

Supported Moderation

Every image, video, and text submission is checked instantly, so no risks slip through.

What is Distinguished Personality Abuse Protection?

Targeted-Abuse Guard

Finds slurs, insults, and harassment aimed at public figures, creators, and well-known personalities. Blocks pile-ons and brigadin...

Violence & Threats Check

Detects threats, violent fantasies, and calls to harm in text and on images and memes. Stops escalation and protects community safety.

Defamation & False Claims

Flags defamatory text overlays, fake quotes, and doctored posters. Routes to block / escalate with evidence for review.

Cross-Media Detection

Analyzes text, images, and video frames together (OCR + vision + NLP). Catches abuse hidden in thumbnails, captions, and stickers.

Context & Policy Aware

Distinguishes news/satire from abuse using policy rules. Returns Allow / Warn / Limit / Block / Escalate with clear reasons and log...
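As an illustration, the decision step could be sketched as follows. This is a minimal sketch, not MediaFirewall's implementation: the `Action` enum mirrors the five outcomes named above, while the `Signal` fields, the score thresholds, and the `decide` function are hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    LIMIT = "limit"
    BLOCK = "block"
    ESCALATE = "escalate"


@dataclass
class Signal:
    # Hypothetical classifier outputs; scores are assumed to lie in [0, 1].
    abuse_score: float
    threat_score: float
    is_news_or_satire: bool


def decide(signal: Signal) -> tuple[Action, str]:
    """Map classifier signals to an action plus a human-readable reason."""
    # Assumed policy: credible threats are escalated regardless of context.
    if signal.threat_score >= 0.8:
        return Action.ESCALATE, "threat of violence"
    # News or satire is allowed unless the abuse score is severe.
    if signal.is_news_or_satire and signal.abuse_score < 0.9:
        return Action.ALLOW, "news or satire context"
    if signal.abuse_score >= 0.9:
        return Action.BLOCK, "severe targeted abuse"
    if signal.abuse_score >= 0.6:
        return Action.LIMIT, "likely harassment"
    if signal.abuse_score >= 0.3:
        return Action.WARN, "borderline language"
    return Action.ALLOW, "no policy match"
```

Returning the reason alongside the action keeps every decision explainable, which is what makes later logging and review possible.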

How our Moderation Works

AI scans content instantly to detect and block abuse, harassment, and defamation targeting public figures.

Why use MediaFirewall.ai's Distinguished Personality Abuse Protection?

Simply put, our filter is the best value for money out there.

Operational Efficiency at Scale

Automate detection of impersonation, defamation, and visual misuse of public fig...

Real-Time Livestream Coverage

Identify and block abusive content involving prominent personalities in livestre...

Seamless Platform Integration

Deploy effortlessly via API or SDK. Customize filters by region, profile type, o...

Built for Policy-Driven Enforcement

Enable tailored abuse detection for public figures across countries and categori...

Distinguished Personality Abuse Protection Filter FAQ

It detects abuse, harassment, threats, and defamatory content aimed at public figures and well-known personalities in text, images, and videos.

AI scans posts, comments, images, and clips using NLP, OCR, and visual analysis, then applies your policy before content is visible.
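A pre-visibility check of this kind might look roughly like the sketch below. It is illustrative only: the analyzers are simple keyword stand-ins for real NLP and OCR models, and every name here (`text_analyzer`, `ocr_analyzer`, `publish_gate`, the `image_text` field, the placeholder blocklist) is an assumption rather than part of the product's API.

```python
from typing import Callable

# Each analyzer stands in for a real model and returns the reason codes
# it found for a post. All names in this sketch are hypothetical.
Analyzer = Callable[[dict], list[str]]

BLOCKLIST = {"examplethreat", "exampleslur"}  # placeholder terms


def text_analyzer(post: dict) -> list[str]:
    """Stand-in for NLP: flag posts whose text contains a blocklisted word."""
    words = set(post.get("text", "").lower().split())
    return ["abusive_text"] if BLOCKLIST & words else []


def ocr_analyzer(post: dict) -> list[str]:
    """Stand-in for OCR: assumes overlay text was already extracted."""
    words = set(post.get("image_text", "").lower().split())
    return ["abusive_overlay"] if BLOCKLIST & words else []


def publish_gate(post: dict, analyzers: list[Analyzer]) -> tuple[bool, list[str]]:
    """Run every analyzer before publication; publish only if none fires."""
    reasons = [code for analyze in analyzers for code in analyze(post)]
    return (not reasons, reasons)


# Usage: the caption is clean, but the image overlay is not.
post = {"text": "great speech", "image_text": "EXAMPLESLUR here"}
allowed, reasons = publish_gate(post, [text_analyzer, ocr_analyzer])
```

Running all analyzers over the same post is what lets a check catch abuse that appears in an image overlay even when the accompanying text is harmless.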

Abusive content against public figures harms user safety, brand trust, and community health; this filter blocks it at the source.

Yes. OCR + vision detect text on images, symbols, and layouts used to hide slurs, threats, or defamatory claims.

Context rules can allow, warn, limit reach, or escalate so fair criticism is treated differently from harassment.

Pre-visibility by default, and on edits or re-uploads. Livestreams receive continuous checks.

A boolean result with reason codes, timestamps, and evidence snapshots, ready for audits and appeals.
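To make the shape of such a result concrete, here is one possible representation. The field names and the JSON serialization are assumptions made for this sketch; the actual response schema is not documented here.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    """ISO 8601 timestamp in UTC, used as the default check time."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class ModerationResult:
    # Hypothetical field names mirroring the four elements described above.
    flagged: bool
    reason_codes: list[str] = field(default_factory=list)
    checked_at: str = field(default_factory=_utc_now)
    evidence_snapshots: list[str] = field(default_factory=list)

    def to_audit_json(self) -> str:
        """Serialize with sorted keys so stored records are easy to diff."""
        return json.dumps(asdict(self), sort_keys=True)


# Usage: a flagged item with one reason code and one evidence reference.
result = ModerationResult(
    flagged=True,
    reason_codes=["threat"],
    checked_at="2024-01-01T00:00:00+00:00",
    evidence_snapshots=["snapshot-001.png"],
)
record = json.loads(result.to_audit_json())
```

Storing the timestamp and evidence references with the decision means an appeal can be reviewed against exactly what was seen at check time.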

It reduces harmful exposure, supports policy enforcement, and provides audit-ready logs aligned with regional online-safety standards.