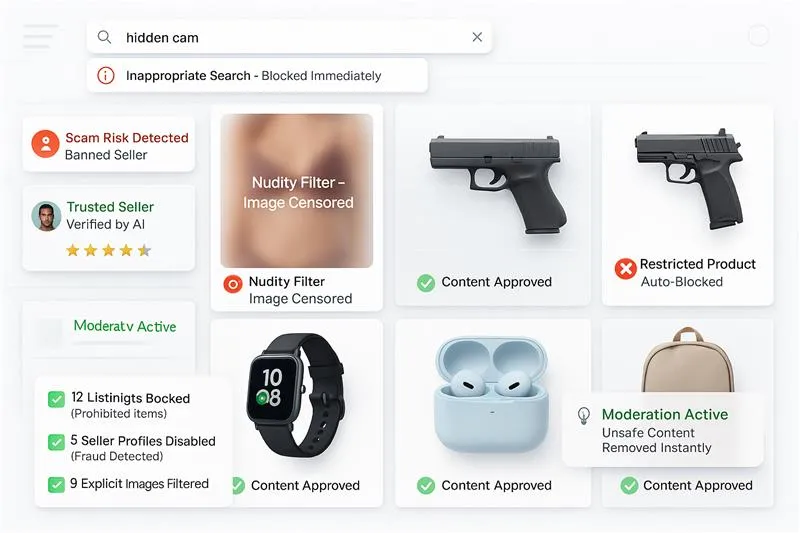

VIDEO MODERATION

Say Goodbye to Unsafe Video Uploads

AI video moderation that scans full videos, clips, and live streams for nudity, sexual content, violence, and other unsafe visuals. Frame-by-frame analysis flags or blocks risky scenes before users see them. Built for dating apps, social platforms, and marketplaces that need safe, compliant video content.