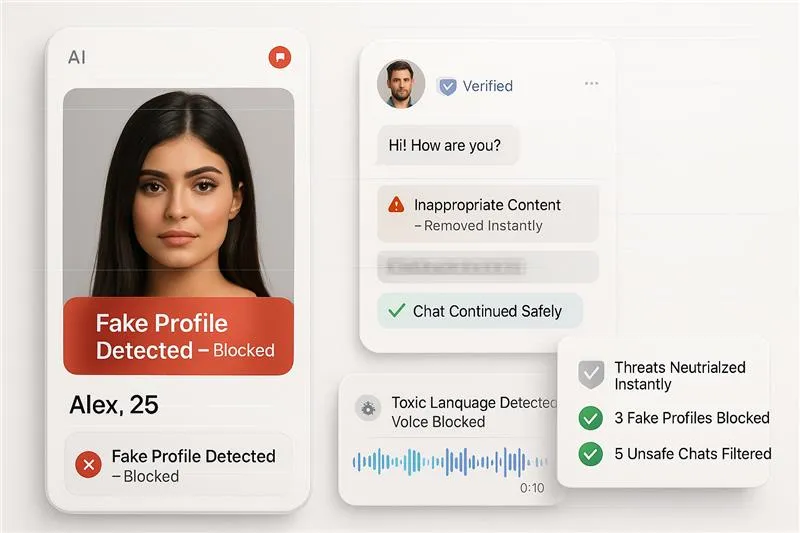

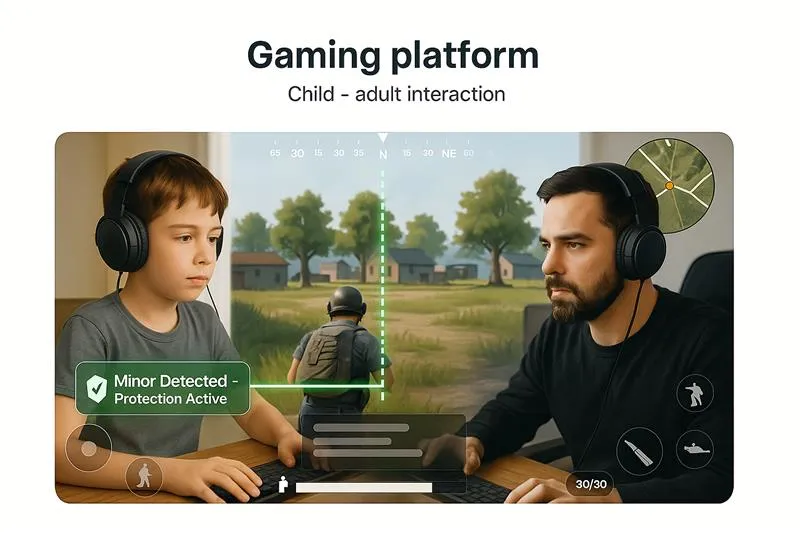

AUDIO MODERATION

Stop Abuse, Explicit Audio, and Threats in Voice Chats with Real-Time Moderation.

AI audio moderation scans voice notes, voice calls, and live streams for hate speech, abuse, and sexual content. It works in real time to flag, mute, or block harmful voice content before it reaches other users. Built for dating apps, social media, and gaming platforms that must keep voice chat safe for their users.