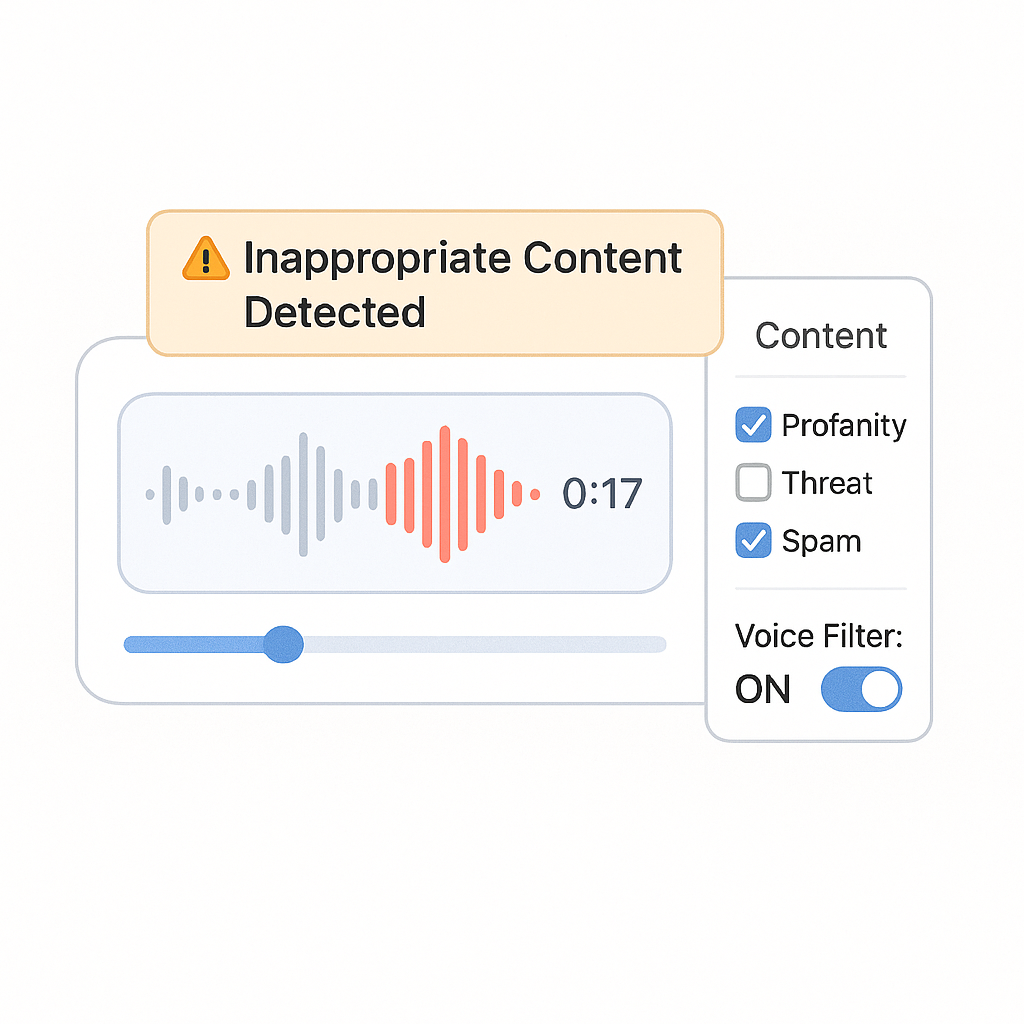

INAPPROPRIATE & ABUSIVE VOICE FILTER


Stop Profanity, Harassment, Sexual Grooming & Violent Speech in Real Time

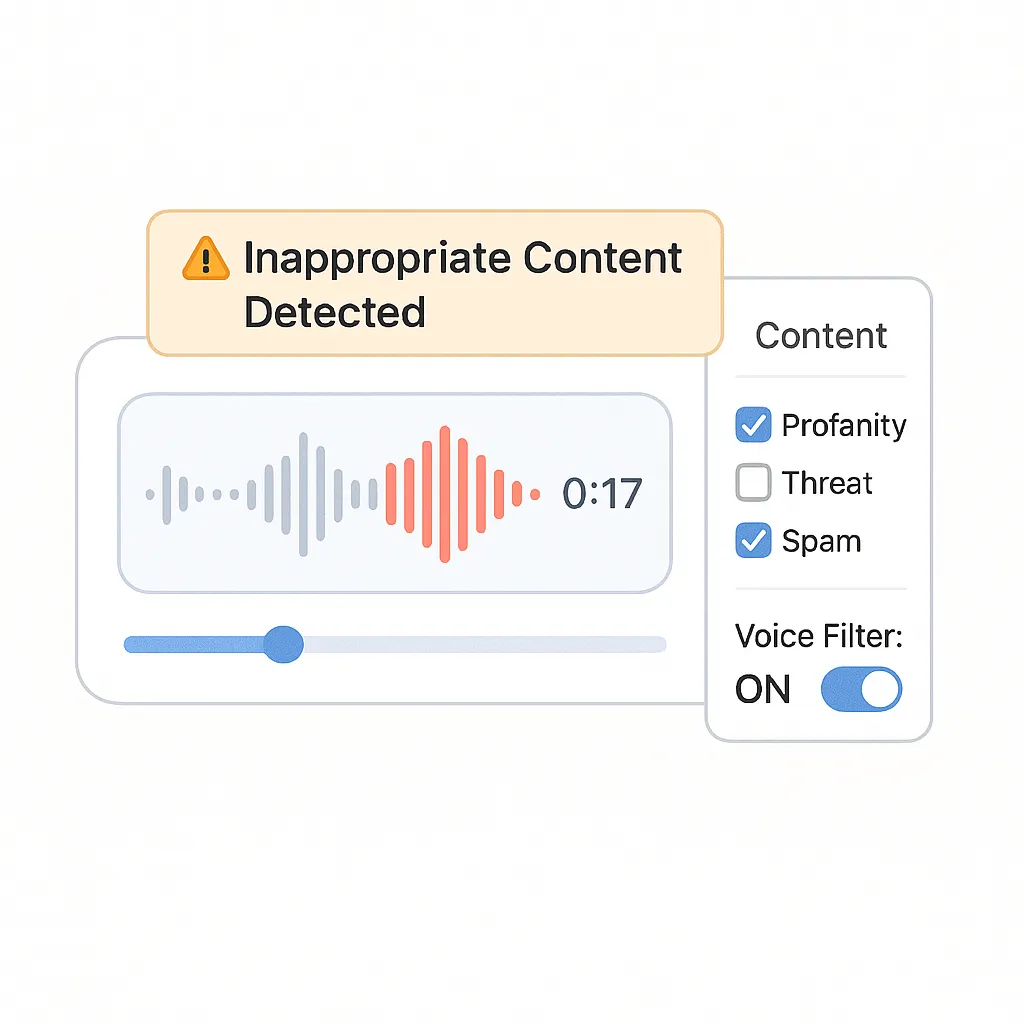

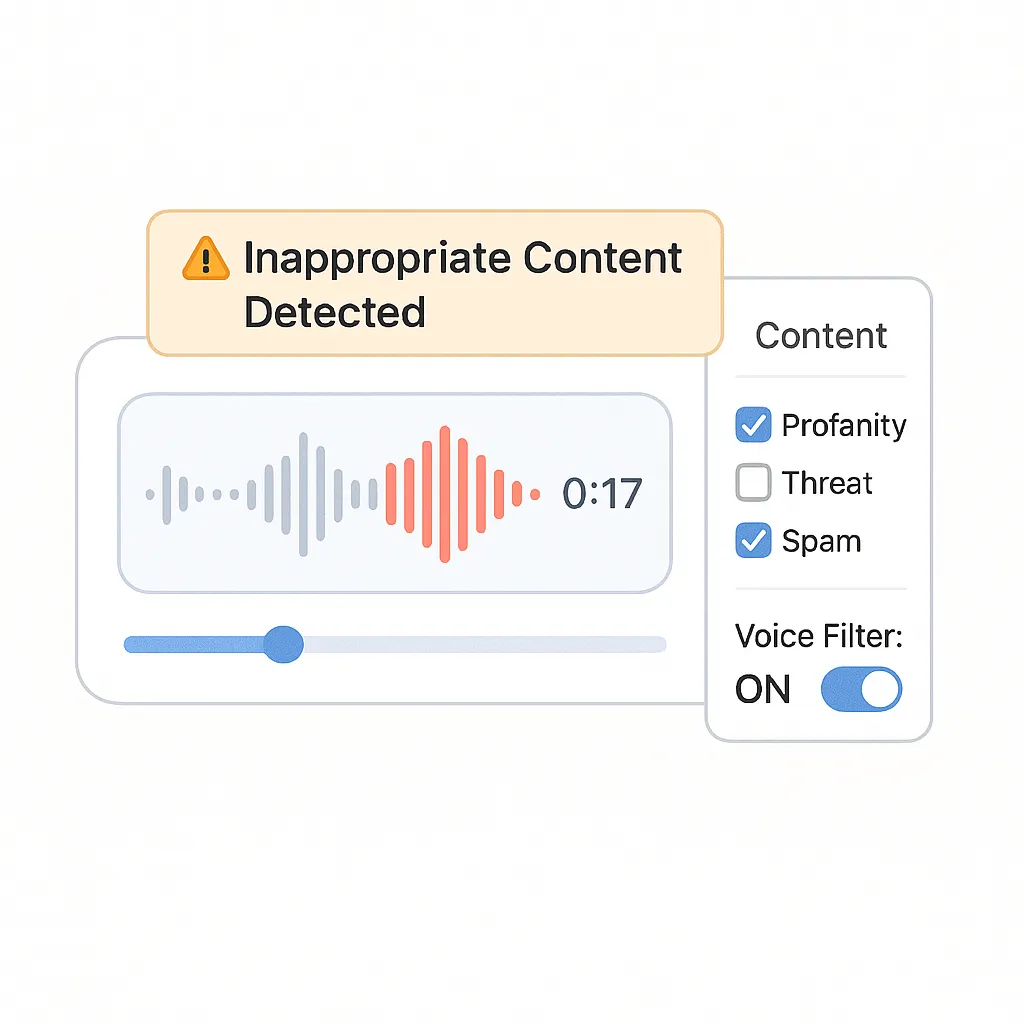

Mediafirewall AI’s Inappropriate & Abusive Voice Filter moderates live and recorded audio. It detects profanity, slurs, sexual grooming, harassment, and incitement before listeners hear them. Automatic speech recognition (ASR) and intent models handle slang, code words, and multilingual abuse. Protect communities, reduce harm, and stay compliant with audit-ready actions.

Supported Moderation

Every image, video, or text is checked instantly, so no risks slip through.

What is the Inappropriate & Abusive Voice Filter?

Works Without Transcripts

Detects tone, aggression, and abuse directly from raw audio, with no dependence on voice-to-text.

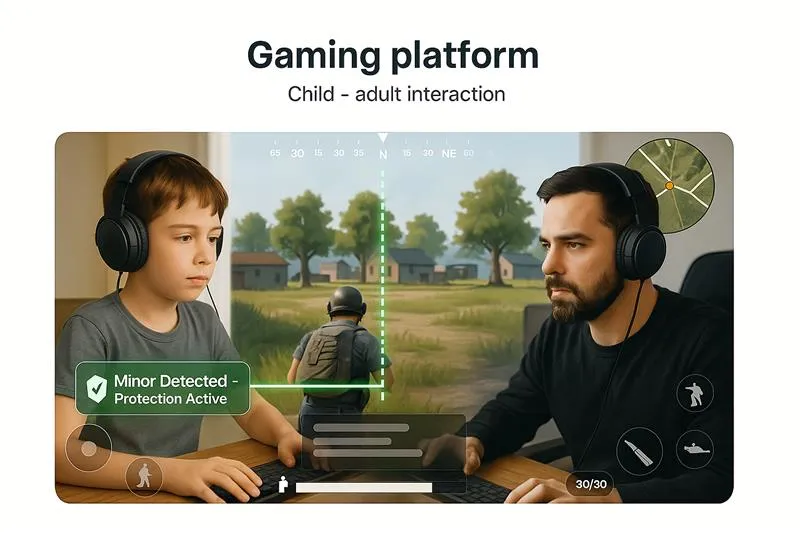

Supports Multi-Speaker Environments

Handles group calls, voice rooms, and multiplayer streams, even with overlapping conversations.

Language- and Accent-Agnostic

Trained on global voice data to detect violations across dialects, slang, and pronunciation variants.

Use-Case-Aware Enforcement

Audio policy adapts to the platform type, whether dating voice calls or open-mic forums.
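To illustrate what use-case-aware enforcement could look like, here is a minimal sketch in which each platform type maps to its own per-category thresholds and enforcement action. The platform names, categories, threshold values, the `Policy` class, and the `POLICIES` table are all hypothetical; this is not Mediafirewall AI's actual configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    # Hypothetical per-platform policy: the minimum risk score (0.0-1.0)
    # at which each category triggers enforcement.
    thresholds: dict[str, float]
    action: str  # action applied when any category meets its threshold


# Hypothetical example policies; values are illustrative only.
POLICIES = {
    "dating_call": Policy({"sexual": 0.9, "harassment": 0.6, "grooming": 0.3}, "mute"),
    "open_mic": Policy({"sexual": 0.6, "harassment": 0.7, "grooming": 0.3}, "warn"),
    "youth_room": Policy({"sexual": 0.2, "harassment": 0.4, "grooming": 0.1}, "remove"),
}


def enforce(platform: str, scores: dict[str, float]) -> str:
    """Return the action for one segment's category scores under a platform's policy."""
    policy = POLICIES[platform]
    for category, threshold in policy.thresholds.items():
        if scores.get(category, 0.0) >= threshold:
            return policy.action
    return "allow"
```

With these assumed values, the same segment scoring 0.5 for sexual content passes on a dating call (`enforce("dating_call", {"sexual": 0.5})` returns `"allow"`) but is removed in a youth room (`"remove"`), which is the kind of platform-dependent outcome the text describes.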

Built for High-Concurrency Platforms

Moderates thousands of concurrent streams without degrading audio quality or requiring moderator staffing.

How Our Moderation Works

Mediafirewall AI processes voice in real time. As users speak, the AI evaluates tone, content, and speech patterns for risk, flagging or stopping harmful voice input before it is broadcast or stored.
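The "stop before broadcast" step can be pictured as a gate that holds each audio chunk until it has been scored. The sketch below assumes a pluggable scorer returning a risk score in [0.0, 1.0] and assumes signed PCM audio (where zeroed bytes are silence); `gate_stream`, `Scorer`, `toy_score`, and the 0.8 threshold are hypothetical names and values, not Mediafirewall AI's API.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical scorer signature: takes one raw audio chunk and returns a
# risk score in [0.0, 1.0]. A real system would call its models here.
Scorer = Callable[[bytes], float]


def gate_stream(chunks: Iterable[bytes], score: Scorer,
                threshold: float = 0.8) -> Iterator[bytes]:
    """Forward each chunk only after it has been scored.

    Chunks scoring at or above `threshold` are replaced with all-zero
    bytes of the same length (silence for signed PCM), so the stream keeps
    its timing but listeners never hear the flagged audio.
    """
    for chunk in chunks:
        if score(chunk) >= threshold:
            yield bytes(len(chunk))  # mute: same duration, zeroed samples
        else:
            yield chunk


# Usage with a toy scorer that flags any chunk containing the byte 0xFF.
def toy_score(chunk: bytes) -> float:
    return 1.0 if 0xFF in chunk else 0.0


muted = list(gate_stream([b"\x01\x02", b"\xff\xff", b"\x03\x04"], toy_score))
```

Replacing flagged chunks with silence, rather than dropping them, is one possible design choice: it keeps downstream buffers and timestamps aligned. Holding each chunk until it is scored adds at least one chunk of latency, so chunk size trades responsiveness against scoring context.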

Why Mediafirewall AI’s Inappropriate & Abusive Voice Filter?

When harmful voice content slips through, it puts trust and user safety at risk. This filter catches abusive or inappropriate speech in real time, safeguarding platforms, communities, and brands with accurate, AI-driven detection.

No Transcription Dependency

Filters on audio signals, not on delayed transcripts or post-event reviews.

Voice Safety at Platform Scale

Built to moderate live conversations across 1:1 calls, group chats, and live broa...

Policy Match, Not Just Word Match

Understands verbal aggression, hate tones, and coercive behavior even when disgu...

Fast, Invisible, Compliant

Enforces voice moderation standards with no user disruption while keeping full a...

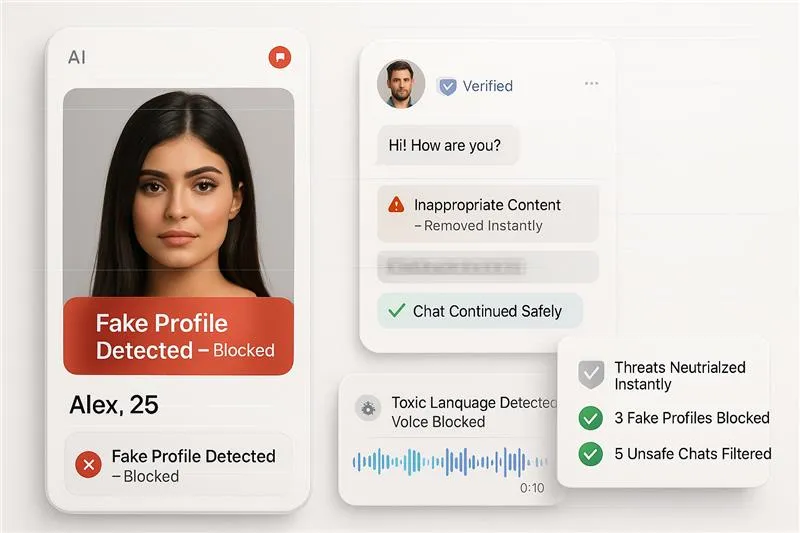

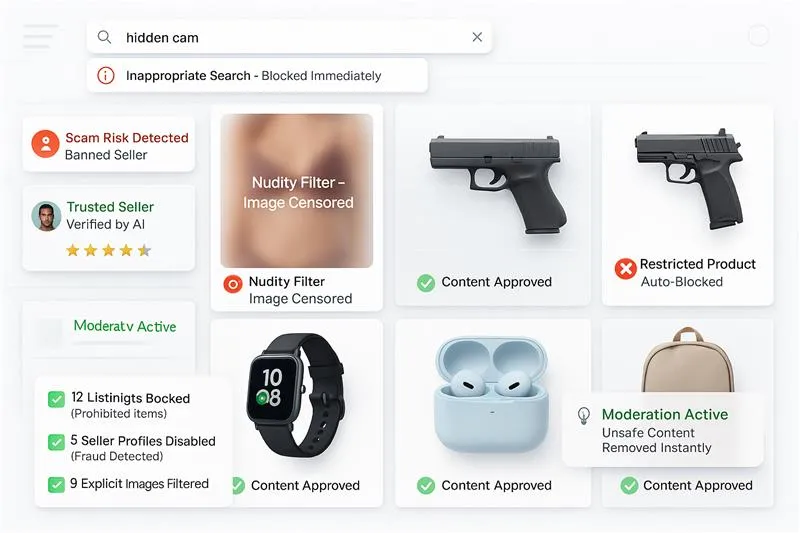


Inappropriate & Abusive Voice Filter FAQ

It detects profanity, slurs, sexual content, grooming, harassment, threats, and incitement in live and recorded audio.

ASR (speech-to-text) plus intent models analyze words, tone, and patterns, then apply your policy before harmful audio reaches listeners.

Yes. The filter understands slang, leetspeak, accents, and code words, and it supports multilingual detection.

It flags sexual talk, grooming cues, and adult references in youth areas, returning mute/remove/escalate actions.

Live, with near-instant enforcement; recordings are also scanned pre-publish to keep libraries safe.

Context signals (speaker history, repetition, target mentions) and allow/deny lists reduce mistakes; edge cases escalate.
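One way to combine a model score with the context signals and lists mentioned above is sketched here. It assumes the model yields a risk score in [0.0, 1.0] plus a set of terms recognized in the utterance; the `Context` fields, the weights, the 0.4/0.8 bands, and both term lists are hypothetical illustrations, not the product's actual logic.

```python
from dataclasses import dataclass


@dataclass
class Context:
    # Hypothetical context signals for one utterance.
    prior_violations: int = 0   # speaker history
    repeat_count: int = 0       # similar speech repeated recently
    targets_user: bool = False  # utterance names or addresses a specific user


ALLOW_TERMS = {"scunthorpe"}    # hypothetical allow list (known false-positive term)
DENY_TERMS = {"example_slur"}   # hypothetical deny list (placeholder term)


def decide(model_score: float, terms: set[str], ctx: Context,
           low: float = 0.4, high: float = 0.8) -> str:
    """Return "remove", "escalate" (uncertain band), or "allow"."""
    if terms & DENY_TERMS:
        return "remove"          # deny-listed term: enforce regardless of score
    if terms and terms <= ALLOW_TERMS:
        return "allow"           # every recognized term is allow-listed
    score = model_score
    score += 0.05 * min(ctx.prior_violations, 4)  # capped history boost
    score += 0.05 * min(ctx.repeat_count, 4)      # capped repetition boost
    if ctx.targets_user:
        score += 0.1
    score = min(score, 1.0)
    if score >= high:
        return "remove"
    if score >= low:
        return "escalate"        # edge case: route to human review
    return "allow"
```

The middle band between `low` and `high` is where edge cases escalate to a human; widening it sends more decisions to review and fewer automated mistakes to users.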

A clear result (Allow/Warn/Mute/Remove/Escalate), reason code, timestamp, and short evidence snippet for quick decisions.

We provide audit-ready logs (decision, reason, time, snippet) that align with online-safety and platform policies.
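The result and audit-log elements listed above (decision, reason code, timestamp, evidence snippet) could be represented as a small record serialized to one JSON line per decision. This is a sketch under assumptions: the `Action` enum, the `ModerationResult` field names, the `HARASSMENT_TARGETED` reason code, the snippet format, and the JSON Lines layout are hypothetical, not Mediafirewall AI's log schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from enum import Enum


class Action(str, Enum):
    ALLOW = "allow"
    WARN = "warn"
    MUTE = "mute"
    REMOVE = "remove"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class ModerationResult:
    # Hypothetical field names mirroring the elements the FAQ lists.
    action: Action
    reason_code: str  # policy category identifier (hypothetical format)
    timestamp: str    # ISO 8601, UTC
    snippet: str      # short evidence excerpt or clip reference


def to_audit_line(result: ModerationResult) -> str:
    """Serialize one result as a single JSON Lines audit-log entry."""
    record = asdict(result)
    record["action"] = result.action.value  # store the plain string value
    return json.dumps(record, sort_keys=True)


result = ModerationResult(
    action=Action.MUTE,
    reason_code="HARASSMENT_TARGETED",
    timestamp=datetime(2024, 1, 1, tzinfo=timezone.utc).isoformat(),
    snippet="t=12.0s-15.0s",
)
line = to_audit_line(result)
```

Writing one self-contained JSON object per line keeps the log append-only and easy to filter by action or reason code during an audit.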